By participating in the NorthStar Community, you agree to the following code of conduct.

A primary goal of Project NorthStar is to think and work in collaboration, so we can transcend the potential of each of us, and others. We aim to be inclusive to the largest number of contributors, with the most varied and diverse backgrounds possible. We are committed to providing a friendly, safe, and welcoming environment for all, regardless of gender, sexual orientation, skills and education, ability, ethnicity, socioeconomic status, and religion (or lack thereof). This Code of Conduct outlines our expectations for all those who participate in our community, as well as the consequences for unacceptable behavior. We invite all participants to help us create safe and positive experiences for everyone, and we also include ways for our attendees to report any violations. Together we can make a fun, harassment-free, magical experience for everyone, regardless of gender, gender identity, sexual orientation, disability, physical appearance, body size, race, or religion.

A supplemental goal of this Code of Conduct is to increase open [source/culture/tech] citizenship by encouraging participants to recognize and strengthen the relationships between our actions and their effects on our community. Communities mirror the societies in which they exist, and positive action is essential to counteract the many forms of inequality and abuses of power that exist in society. If you see someone who is making an extra effort to ensure our community is welcoming, friendly, and encourages all participants to contribute to the fullest extent, we want to know.

The following behaviors are expected and requested of all community members:

Communicate! Participate in an authentic and active way. In doing so, you contribute to the health and longevity of this community.

Exercise consideration and respect in your speech and actions. Come from a place of understanding.

Attempt collaboration before a conflict.

Refrain from demeaning, discriminatory, or harassing behavior and speech.

Be mindful of your surroundings and your fellow participants. Alert community leaders if you notice a dangerous situation, someone in distress, or violations of this Code of Conduct, even if they seem inconsequential.

The following actions are considered harassment and are unacceptable within our community:

Being a jerk. Being mean. Unkind intentions.

Violence, threats of violence or violent language directed against another person.

Sexist, racist, homophobic, transphobic, ableist or otherwise discriminatory jokes and language.

Posting or displaying sexually explicit or violent material, posting or threatening to post other people’s personally identifying information ("doxing").

Don't be that person. We help people help people.

Unacceptable behavior from any community member, including sponsors and those with decision-making authority, will not be tolerated. Anyone asked to stop unacceptable behavior is expected to comply immediately. If a community member engages in unacceptable behavior, moderators may take any action they deem appropriate, up to and including a warning, temporary ban, or permanent expulsion from the community/event without warning. You can make a report either personally or anonymously.

Anonymous Report

We have an anonymous report form available. We can't follow up on an anonymous report with you directly, but we will fully investigate it and take whatever action is necessary to prevent a recurrence.

This code of conduct was adopted from the code of conduct used by the wonderful people at the .

Personal insults, particularly those related to gender, sexual orientation, race, religion, or disability.

Inappropriate photography or recording. Inappropriate physical contact. You should have someone’s consent before touching them.

Unwelcome sexual attention. This includes sexualized comments or jokes; inappropriate touching, groping, and unwelcome sexual advances.

Deliberate intimidation, stalking or following (online or in person).

Advocating for, or encouraging, any of the above behavior.

Sustained disruption of community events, including talks and presentations.

The future of spatial computing deserves to be open.

"We envision a future where the physical and virtual worlds blend together into a single magical experience. At the heart of this experience is hand tracking, which unlocks interactions uniquely suited to virtual and augmented reality. To explore the boundaries of interactive design in AR, we created and open sourced Project North Star, which drove us to push beyond the limitations of existing systems." -Leap Motion

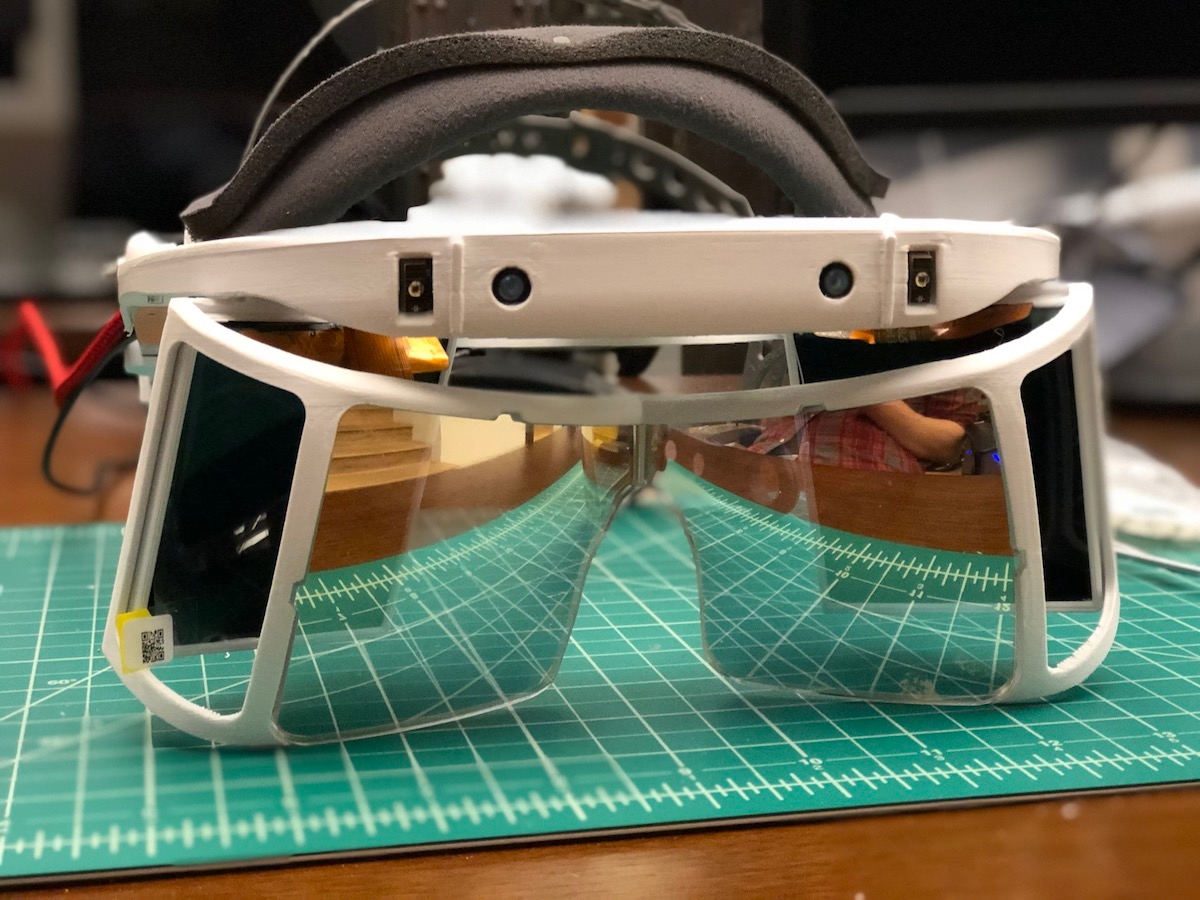

Project North Star is an open-source Augmented Reality headset originally designed by LeapMotion (now UltraLeap) in June 2018. The project has had many variations since its inception, by both UltraLeap and the open-source community. Some of the variations are documented and linked here, but visit the Discord server for the most up-to-date information. The headset is almost entirely 3D printable, with a handful of components like reflectors, circuit boards, cables, sensors, and screws that need to be sourced separately.

Project North Star at MIT Reality Hack 2020, photo credit:

There's also a large community of Northstar developers and builders on Discord. You can join the server to share your build, ask questions, or get help with your projects.

For a more detailed look into the project, check out our General FAQ or Mechanical pages.

Project North Star has seen its fair share of revisions and updates since the original open-source files were released. To clear up any ambiguity from the outset, Release 1 was an internal release. Release 2, the first public open-source release (sometimes referred to as the initial release), was in 2018. Release 3 came in 2019 and improved on the mechanical design in many ways. The Deck X built on this and added an integrated circuit board that reduces the cables from the headset to just two (USB 3 and mini-DP) by combining the USB devices into a custom-built USB hub + Arduino module. As shown in the table below, the newly released Northstar Next is probably what new users will want to start with, as it emphasizes modularity and affordability.

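| Release | Status | Release date | Designed by | 6DOF tracking | Hand tracking | | |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Northstar Next | Active | 06 JUN 2023 | CombineReality | XR50 / T261 | SIR 170 | BoboVR | Custom |
| Deck X | Active | 21 AUG 2020 | CombineReality | T261 | LMC / SIR 170 | 3.1 | 3.2 |
| Release 3-2 | In Dev. | TBD | Leap Motion | T265 | LMC | 3.2 | 3.2 |
| Release 3-1 | Outdated | 03 APR 2019 | Leap Motion | T265 | LMC | 3.1 | 3.1 |
| Release 3 | Outdated | 23 JAN 2019 | Leap Motion | T265 | LMC | 3 | 3 |
| Release 2 | Outdated | 6 JUN 2018 | Leap Motion | N/A | LMC | 1 | 1 |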

A collection of terms and acronyms of varying degrees of ambiguity

Given that Northstar is a project that combines many different fields of research and expertise, it's unlikely that any one person will ever know or be able to recall all the terms, acronyms and technical jargon used to discuss these various industries. This glossary intends to define some of the more particular terms, starting with the field of electrical engineering, but will eventually grow to cover other topics in more depth, like firmware and software. This page is open to pull requests, so please feel free to submit PRs with more terms!

XR is an umbrella term for Virtual Reality, Augmented Reality and Mixed Reality. Some say it stands for Extended Reality; others say the X is a variable. Check Twitter if you want to see people argue about it for literal years. In general, XR is the term the Northstar community uses when discussing VR, AR, or MR. For more general discussions we tend to enjoy using "Spatial Computing".

A Combiner is an Optical component that takes rays of light and focuses them to a single end point.

DOF is a fun one because the acronym has two meanings. In traditional camera work it means Depth of Field. For Northstar and other XR devices it means "Degrees of Freedom". In a 6-DOF system you can rotate about three axes and also move along three axes.
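As a rough illustration of the distinction, a 6-DOF pose can be modeled as three translation values plus three rotation values, while a 3-DOF pose keeps only the rotation. The class and field names below are hypothetical and are not taken from any Northstar software.

```python
from dataclasses import dataclass


@dataclass
class Pose6DOF:
    """Hypothetical pose container: 3 translation + 3 rotation degrees of freedom."""

    # Translation along the three axes, in metres.
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    # Rotation about the three axes, in degrees.
    pitch: float = 0.0
    yaw: float = 0.0
    roll: float = 0.0

    def as_3dof(self) -> "Pose6DOF":
        """Drop translation and keep orientation, as a rotation-only tracker would."""
        return Pose6DOF(pitch=self.pitch, yaw=self.yaw, roll=self.roll)


# A head that is 1.6 m up, slightly offset, and turned 90 degrees to the side.
head = Pose6DOF(x=0.1, y=1.6, z=-0.3, pitch=5.0, yaw=90.0, roll=0.0)
rotation_only = head.as_3dof()
```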

IPD stands for Interpupillary Distance. This is the term for the distance between your pupils, which is useful information when correcting for optical distortion.

The Eye Box is the area in which the image from a headset is clearly visible. Northstar has a fairly large Eye Box meaning you can adjust the headset to be more comfortable without worrying too much about whether or not you've got it positioned just right.

FOV stands for field of view. It usually refers to how much you can see through a headset, and can also describe how much a camera can see. FOV can be measured horizontally, vertically or diagonally.
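The three measurements are related. For an idealized rectilinear (pinhole-style) view, the tangent of half the diagonal angle is the hypotenuse of the tangents of the half horizontal and half vertical angles. The sketch below assumes that idealized model, which real headset optics and wide-angle cameras only approximate; the function name is illustrative.

```python
import math


def diagonal_fov_deg(horizontal_deg: float, vertical_deg: float) -> float:
    """Diagonal FOV of an ideal rectilinear view with the given H and V FOV."""
    tan_h = math.tan(math.radians(horizontal_deg) / 2)
    tan_v = math.tan(math.radians(vertical_deg) / 2)
    # tan(d/2)^2 = tan(h/2)^2 + tan(v/2)^2
    return math.degrees(2 * math.atan(math.hypot(tan_h, tan_v)))


# A view that is 90 degrees wide and 90 degrees tall.
square_view = diagonal_fov_deg(90.0, 90.0)
```

Note that the diagonal figure is always the largest of the three, which is why it is worth checking which measurement a quoted FOV refers to.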

Latency is an overall term for how long a signal takes to get from one location to another, and it applies to many areas of the Northstar project. For example, there is latency between when an image is rendered by the computer and when it is displayed on the Northstar screen, and between when a tracking camera captures an image and when that image reaches the onboard processor. In short, latency is time, and the longer something takes the more latency it has.
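Because latency is just time, the delays of each stage add up. The sketch below sums a set of stage timings into an end-to-end figure. The stage names and the camera and pose timings are made-up placeholders, and the 120 Hz refresh rate is only used as an example value for computing a frame interval.

```python
def frame_interval_ms(refresh_hz: float) -> float:
    """Time between two consecutive frames at the given refresh rate."""
    return 1000.0 / refresh_hz


def total_latency_ms(stages_ms: dict) -> float:
    """End-to-end latency is the sum of every stage the signal passes through."""
    return sum(stages_ms.values())


# Hypothetical pipeline: every number here is an illustrative assumption.
pipeline = {
    "tracking_camera": 4.0,
    "pose_estimation": 3.0,
    "render": frame_interval_ms(120),
    "display_scanout": frame_interval_ms(120),
}
end_to_end = total_latency_ms(pipeline)
```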

VAC stands for the Vergence Accommodation Conflict. This term describes the difficulty our eyes have in focusing on objects that optically appear to be at one distance but, via stereo disparity, appear to be at a different distance.
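A small worked example may make the conflict more concrete. The vergence angle follows from the IPD and the distance of the virtual object, and the size of the mismatch is commonly expressed in diopters (the difference of the inverse distances). The IPD, focal distance, and object distance below are assumed values for illustration, not measured Northstar figures.

```python
import math


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Angle between the two eyes' lines of sight when fixating at distance_m."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def vac_diopters(focal_distance_m: float, virtual_distance_m: float) -> float:
    """Mismatch between where the optics focus and where stereo places the object."""
    return abs(1.0 / focal_distance_m - 1.0 / virtual_distance_m)


ipd = 0.064             # assumed 64 mm interpupillary distance
focal_distance = 0.75   # assumed fixed focal distance of the optics, in metres
object_distance = 0.30  # virtual object rendered 30 cm from the eyes

angle = vergence_angle_deg(ipd, 0.5)
mismatch = vac_diopters(focal_distance, object_distance)
```

The closer the virtual object is rendered relative to the optical focal distance, the larger the mismatch becomes.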

DP stands for DisplayPort. This is the display signal type that Northstar uses. In general, converting from DP to HDMI is easy, while converting from HDMI to DP is incredibly difficult and rarely works. In our experience, looking into HDMI to DP adapters is a waste of time.

DP alt mode is a specific subsection of the USB Type-C spec that allows it to carry a DisplayPort signal.

PD stands for Power Delivery. This is another subsection of the USB specification, indicating that a USB port has the necessary components to supply more power than a standard port.

PCB stands for Printed Circuit Board. These are typically sheets of multi-layered copper that contain traces which allow electrical signals to be transmitted on the board. These signals can include power and data.

FPC stands for Flexible Printed Circuit. These are similar to PCBs, with the main difference being that they are flexible and can bend. These cables are used to connect the displays and other components, and are often used for board-to-board connections when the PCBs sit in positions that a rigid connection isn't suited for.

MCU stands for Microcontroller Unit. Microcontrollers are little self-contained computers in a chip that execute programs called firmware. These chips are mounted on PCBs, or printed circuit boards. The programs control various peripherals that are either built into the chip or connected externally. Popular examples of microcontrollers include and Raspberry Pi.

MIPI is a general industry group and standard for display signal interfaces and camera signal interfaces, among others. For Northstar's case we care mostly about display and camera signals. You can read more about MIPI .

HID stands for Human Interface Device. It is a set of standards for connecting peripherals like keyboards and mice, intended for 'driverless' operation. You can read about the specification , and Microsoft has a great resource on HID .
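To give a feel for what a 'driverless' keyboard actually sends, here is a minimal sketch of the standard 8-byte boot keyboard input report: one modifier byte, one reserved byte, and up to six key usage IDs. The usage ID 0x04 for the "a" key and the left-shift modifier bit come from the HID usage tables; the function name is hypothetical and this is not firmware from any Northstar board.

```python
KEY_A = 0x04           # HID usage ID for the "a" key (Keyboard/Keypad usage page)
MOD_LEFT_SHIFT = 0x02  # bit 1 of the modifier byte


def build_keyboard_report(modifiers: int, keycodes: list) -> bytes:
    """Build an 8-byte boot keyboard report: modifiers, reserved, 6 key slots."""
    if len(keycodes) > 6:
        raise ValueError("a boot keyboard report holds at most 6 keys")
    padded = list(keycodes) + [0] * (6 - len(keycodes))
    return bytes([modifiers & 0xFF, 0x00, *padded])


press_a = build_keyboard_report(0, [KEY_A])                      # "a"
press_shift_a = build_keyboard_report(MOD_LEFT_SHIFT, [KEY_A])   # "A"
release_all = build_keyboard_report(0, [])                       # no keys held
```

A key press is typically sent as a report containing the key, followed by an all-zero report to signal release.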

I2C stands for Inter-Integrated Circuit. It is widely used for attaching lower-speed peripherals to processors and for short-distance, intra-board communication.
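One detail that often trips people up is addressing. With standard 7-bit addressing, the first byte on the bus is the device address shifted left by one, with the lowest bit indicating a read (1) or a write (0). The sketch below shows only that arithmetic; the address 0x68 is an arbitrary example value, not a documented Northstar device.

```python
def i2c_address_byte(address_7bit: int, read: bool) -> int:
    """First byte of an I2C transaction: 7-bit address plus the R/W bit."""
    if not 0 <= address_7bit <= 0x7F:
        raise ValueError("7-bit I2C addresses range from 0x00 to 0x7F")
    return (address_7bit << 1) | (1 if read else 0)


EXAMPLE_ADDRESS = 0x68  # hypothetical peripheral address
write_byte = i2c_address_byte(EXAMPLE_ADDRESS, read=False)
read_byte = i2c_address_byte(EXAMPLE_ADDRESS, read=True)
```

This is why some datasheets list a device under two "8-bit addresses" while libraries usually expect the single 7-bit value.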

The Serial Peripheral Interface (SPI) is a synchronous serial communication interface specification used for short-distance communication, primarily in embedded systems. The interface was developed by Motorola in the mid-1980s and has become a de facto standard. Typical applications include Secure Digital cards and liquid crystal displays. You can learn more about SPI .

A DP to MIPI bridge is a specific chip designed to convert signal from a DP input to a MIPI signal to be read by displays.

Git is a common source control system. It allows developers to maintain a history of their code, which is incredibly helpful for diagnosing the undesired results of changes to that code. You can learn more about Git here:

A Pull Request is a term used to describe the act of submitting a piece of code for review and merging into the main code base. You can learn more about pull requests

A runtime is an intermediary process between end user applications and hardware. In Northstar's case runtimes can include , Monado and .

An OpenXR runtime is software which implements the OpenXR API. There may be more than one OpenXR runtime installed on a system, but only one runtime can be active at any given time.
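As a hedged sketch of how the single active runtime is usually selected on Linux: the OpenXR loader honors the `XR_RUNTIME_JSON` environment variable if it is set, and otherwise looks for an `active_runtime.json` manifest in the user and system configuration directories. The function below is a simplified approximation of that search order (the real loader checks more locations), and its name is made up for this example.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def find_active_runtime_manifest(env: Mapping[str, str] = os.environ) -> Optional[Path]:
    """Approximate which runtime manifest the OpenXR loader would pick on Linux."""
    # An explicit override always wins.
    override = env.get("XR_RUNTIME_JSON")
    if override:
        return Path(override)

    # Otherwise check the per-user config directory, then a system-wide one.
    config_home = env.get("XDG_CONFIG_HOME") or str(Path.home() / ".config")
    candidates = [
        Path(config_home) / "openxr" / "1" / "active_runtime.json",
        Path("/etc/xdg/openxr/1/active_runtime.json"),
    ]
    for candidate in candidates:
        if candidate.is_file():
            return candidate
    return None


# Example: force a specific runtime manifest (the path is a placeholder).
forced = find_active_runtime_manifest({"XR_RUNTIME_JSON": "/opt/example/openxr_runtime.json"})
```

Switching runtimes therefore usually means changing which manifest is active, not uninstalling the other runtimes.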

OpenXR is an API (Application Programming Interface) for XR applications. XR refers to a continuum of real-and-virtual combined environments generated by computers through human-machine interaction and is inclusive of the technologies associated with virtual reality (VR), augmented reality (AR) and mixed reality (MR). OpenXR is the interface between an application and a runtime. The runtime may handle such functionality as frame composition, peripheral management, and raw tracking information.

SLAM is a term that originated in robotics meaning Simultaneous Localization and Mapping. It's become the generic term for systems that can see their environment, localize themselves to a previously known location, and generate a map of that environment for other devices to localize against.

VIO stands for Visual Inertial Odometry. VIO is an important part of the processing needed for SLAM, but does not provide localization or mapping. VIO solely combines visual data with inertial measurements from an IMU.

IMU stands for Inertial Measurement Unit. It is a device that can read out vectors like acceleration and gravity. In Northstar's case these devices are used for 3DOF movement and can help with and
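As an illustration of how a gravity reading turns into orientation, the sketch below estimates pitch and roll from a single accelerometer sample taken while the device is not accelerating. The axis convention (z pointing up when level) and the function name are assumptions for this example; a real IMU driver may use different axes, and yaw cannot be recovered from gravity alone.

```python
import math


def pitch_roll_from_gravity(ax: float, ay: float, az: float):
    """Estimate (pitch, roll) in degrees from a stationary accelerometer reading."""
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


# Device lying flat: gravity is measured entirely on the z axis.
flat = pitch_roll_from_gravity(0.0, 0.0, 9.81)
# Device rolled onto its side: gravity is measured entirely on the y axis.
on_side = pitch_roll_from_gravity(0.0, 9.81, 0.0)
```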

While this page is under construction, check out the working drawings here:

All notable Mechanical changes will be documented in this file.

BOM for Update 3.1 Assm

Cable guides (#113-002, #114-002)

Simplified folder structure

Updated BOM with links

Exported updated CAD STEP files of assemblies

Associated part numbers with simplified assm (#130-003, #130-004, #130-005, #130-006)

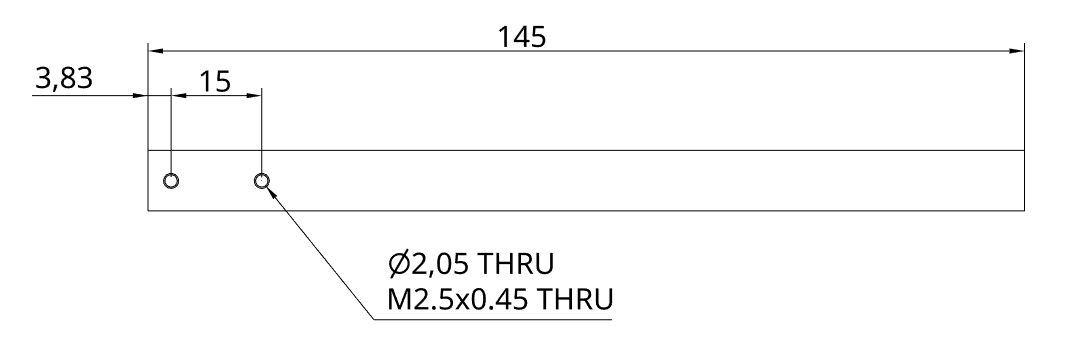

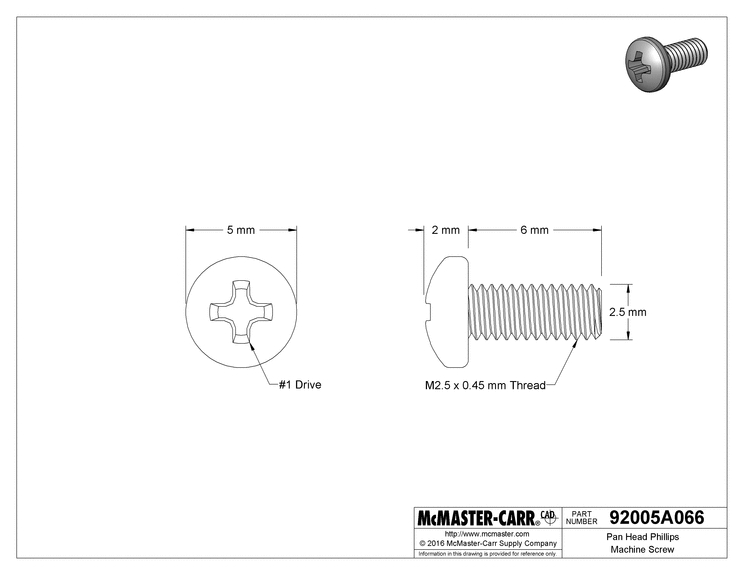

New endcap with M2.5 screw mount (#110-005, #110-006)

Cutout size and shape for al. bar slides (#110-003, #230-002, #240-002)

Modular lid and Vive tracker mount (#130-002, 3, 4, 5, and 6)

Blank mounting plate STEP file

New slide end cap with cable mount (#110-003)

Chamfer on optics bracket display screws, removed lip around rubber washer (#130-001 and #110-001)

Added simplified optics assembly (#130-000)

This page is intended to be specifically about the purchasable kits, so there will be some overlap with the general FAQ. Check the sidebar on the right to jump to the correct question.

Thickened and fillet corners for strength (#230-001,#240-001)

Keyed mates for faster assembly (#220-001,#220-002,#210-003,#210-004)

Thickened for strength, offset edges, removed old cable guide holes (#130-005, #130-006)

Added L+R labels to headgear for easier assembly (#230-000, #240-000)

Added corresponding screwholes for cable guides (#113-001, #114-001)

Kit One contains all the electronics and mechanical parts, along with the 3D printed parts. You'll still need to assemble and calibrate the headset.

Kit Two contains the headset fully assembled and pre-calibrated.

NOTE: All kits have 3m cables. All kits contain one Ultraleap Stereo IR170. Although the RealSense T261 isn’t part of the kits, the kits still provide all the parts needed to assemble the sensor. There are still some stores that sell this particular sensor.

The kits come with a full screwdriver kit and all the screws you'll need to build the headset. The only tool not included is a soldering iron, which is used to heat the heat-set threaded inserts and can be found at any local electronics store for around $10 USD.

These assets are dependent on Release 4.4.0 of the Leap Motion Unity Modules (included in the package).

Make sure your North Star AR Headset is plugged in

In Windows display settings, make sure the headset is showing at the correct resolution (2880x1600) and is positioned to the right of the main monitor.

Create a new Project in Unity 2018.4 LTS

Import "LeapAR.unitypackage" from the Github Repo

Navigate to LeapMotion/North Star/Scenes/NorthStar.unity

Click on the ARCameraRig game object and look for the WindowOffsetManager component

Here, you can adjust the X and Y Shift that should be applied to the Unity Game View for it to appear on the North Star's display

When you're satisfied with the placement, press "Move Game View To Headset"

With the Game View on the Headset, you should be able to preview your experience in play mode!
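The X and Y shift in the steps above follows from how the desktop is laid out. With the headset placed to the right of the main monitor, the headset's region of the virtual desktop starts at an X offset equal to the main monitor's width. The sketch below works through that arithmetic; the 1920-pixel main monitor is an assumed example, the even left/right split per eye is also an assumption, and none of these names come from the WindowOffsetManager component itself.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x: int
    y: int
    width: int
    height: int


HEADSET_WIDTH, HEADSET_HEIGHT = 2880, 1600  # resolution from the setup steps


def headset_rect(main_monitor_width: int, y_shift: int = 0) -> Rect:
    """Desktop region covered by the headset when it sits right of the main monitor."""
    return Rect(main_monitor_width, y_shift, HEADSET_WIDTH, HEADSET_HEIGHT)


def eye_rects(headset: Rect):
    """Split the headset region into left and right halves (assumed side by side)."""
    half = headset.width // 2
    left = Rect(headset.x, headset.y, half, headset.height)
    right = Rect(headset.x + half, headset.y, half, headset.height)
    return left, right


region = headset_rect(main_monitor_width=1920)  # assumed 1920-wide main monitor
left_eye, right_eye = eye_rects(region)
```

If the game view lands partly on the main monitor, the X shift is usually the first value to check.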

Key Code Shortcuts in NorthStar.unity (in the Editor with the Game View in focus and playing)

C to Toggle Visibility of Calibration Bars

We have included a pre-built version of the internal calibration tool. We can make no guarantees about the accuracy of the process in DIY environments; this pipeline is built from multiple stages, each with multiple points of failure. Included in the .zip file are a Python script for calibrating the calibration cameras, a checkerboard .pdf to be used with that script, a Windows-based Calibrator .exe, and a readme describing how to execute the entire process.

Project North Star is an open source augmented reality headset. It was initially released by LeapMotion (now UltraLeap) in 2018 and is currently supported by its open source community.

There have been several iterations of the headset since the project began. The latest build is Northstar Next.

As opposed to Virtual Reality, where the user's view is generated entirely by a computer, Augmented Reality projects a rendered image over the real-world perspective of the user. This gives an effect of the rendered objects existing in the real world.

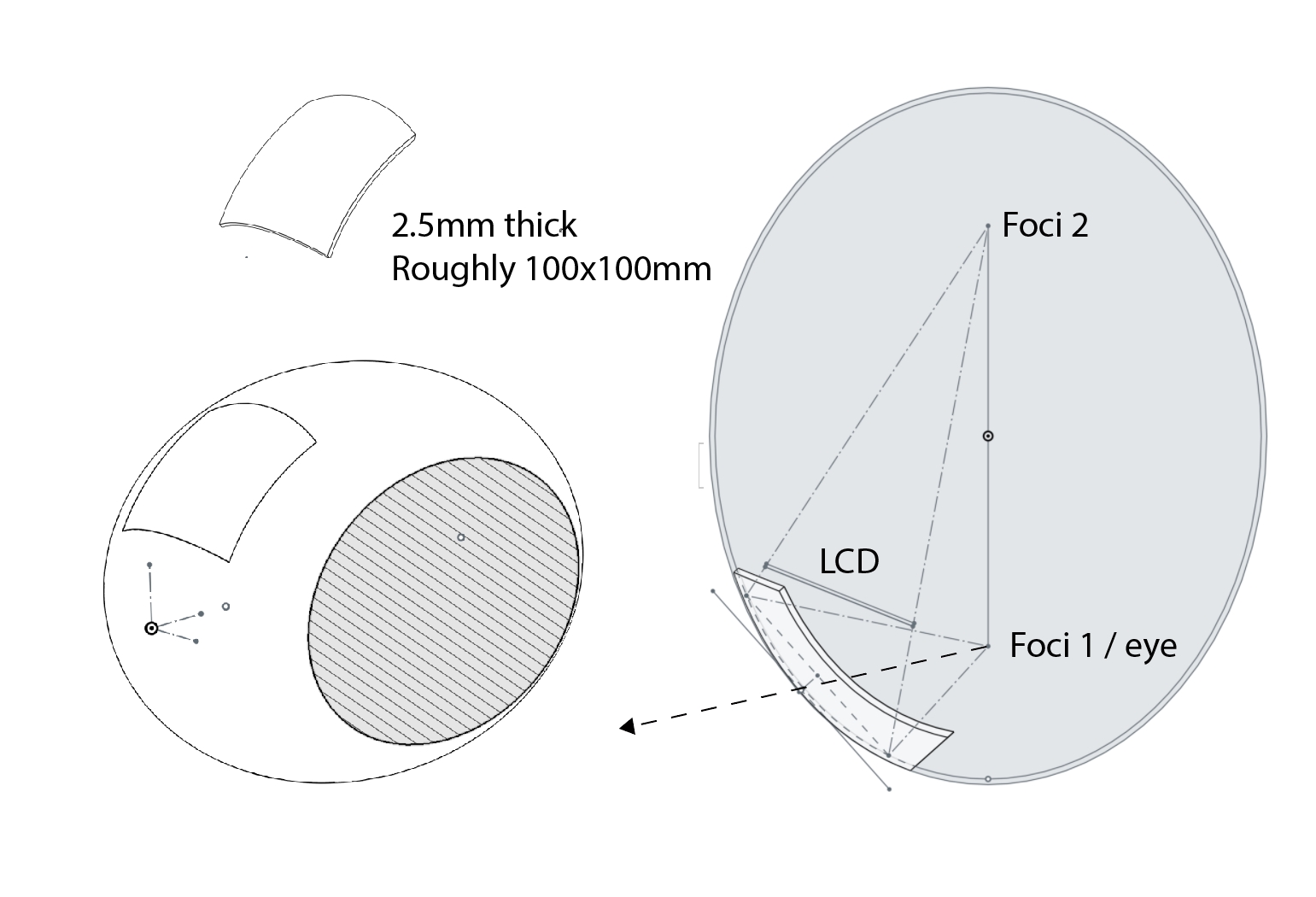

To achieve this effect, an AR headset can use either a "pass-through" or a transparent optical system. A pass-through display streams the outside world to an enclosed LCD/LED screen. A transparent optical system projects an image which is reflected off a lens in front of the user's field of view. Project North Star uses a transparent optical system.

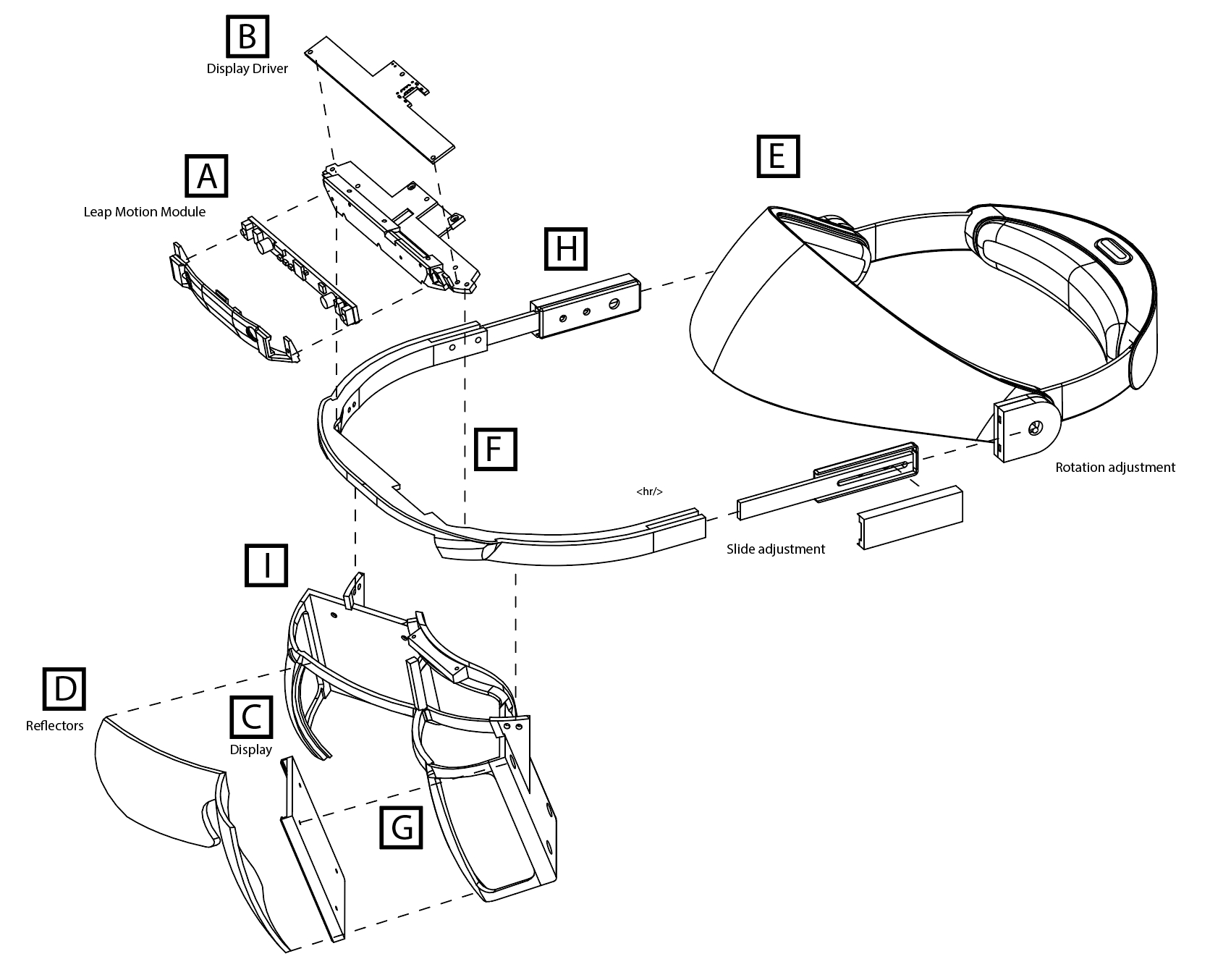

Though an AR headset is a very complex device, we can simplify it by separating its hardware and software into different components, each of which serves a specific function.

Project North Star develops and/or sources the parts for each of these components, and provides instructions to combine them into a complete headset. Each headset version is comprised of different iterations of some or all of these parts.

Each of these components can be separated into the following categories:

Display: The hardware that produces the images.

Optics: Lenses which bend light from the image so it is in-focus for the user.

: Hardware and software that track the user's position and orientation through space.

This separation of responsibility allows for different open or closed source solutions to be used in a headset, resulting in a modular design.

The challenges in this space are not limited to technological problems. Few people have the means to fabricate Printed Circuit Boards (PCBs), lenses, and LCD screens. So maintaining Project Northstar also means project contributors find and work with manufacturers and distributors for bespoke electronics. This influences both the design and availability of the headsets.

With an active community, open documentation, and modular design, Project Northstar's headsets are customizable and repairable in a way that no other closed-source headset is. Anyone can contribute to the project. So the more its users customize and upgrade their devices, the more the project benefits as a whole.

The world is full of stereo cameras and sensors. This page will help you choose which one to get for your headset.

The Northstar Next platform is designed to be modular, which means you can swap in or use any tracking camera or platform you'd like! Given how quickly the tracking landscape changes, and how often new components come out, it's important to be able to upgrade electronics instead of having to build a whole new HMD. It's the reuse part of reduce/reuse/recycle!

Below are a few recommendations for various sensors. They all provide different features and have pros and cons, so be sure to ask on Discord if you have any specific questions!

Generally, when it comes to out-of-the-box hand tracking, Ultraleap (formerly Leap Motion) is the best in the field. They have a variety of sensors you can choose from. For Northstar headsets we generally recommend the SIR170, as it's the lightest available sensor and is designed for integration into headsets. However, you can also use the first- or second-generation Leap Motion cameras. The Leap Motion platform currently works on Windows and Linux, with macOS support coming soon!

Throughout the duration of Project Northstar there have been many 6DOF sensors on the market. In this category, we'll show sensors that can be used "out of the box" with minimal configuration.

The Xvisio XR50 sensor is one of the smallest 6DOF tracking sensors available. It uses a mix of onboard and on host compute to calculate pose, and has some other available features like Plane Detection.

These cameras are no longer being manufactured or supported by Intel, and you'd likely have to find them second hand. Note that the last version of the Realsense SDK that supports these sensors is

While these cameras are now in the end-of-life stage, they do still function and are generally one of the simplest tracking platforms to get started with. The T261 is just the embedded version of the T265, which means it's better suited for use in a weight-sensitive project like this one, but either sensor will function the same. The T26x sensors provide access to a stream of camera poses directly from the device, which means your computer doesn't have to compute the poses.

If you want more reliable 6DOF tracking at the cost of portability, you can choose to use a SteamVR Lighthouse based solution. There are a variety of trackers available for this system, the most common being from HTC Vive and Tundra Labs.

Lighthouse based solutions use two external laser sources to track the position of the tracker. The Lighthouse sensors themselves can be acquired via Valve or HTC.

Luxonis cameras are designed for a variety of computer vision use cases, and can work well for hand tracking, but aren't explicitly designed for the task. This won't be an "out of the box" solution for hand tracking like Ultraleap, but if you're more of a tinkerer, and willing to set up a lot of the software yourself, you can try a camera from Luxonis and use open source hand tracking on Linux platforms.

The Luxonis cameras also sport an onboard Movidius AI chip, which means you can run other computer vision tasks as well, directly on the sensor itself.

There are many variants of Luxonis cameras to choose from. We generally recommend the Oak-D-W or Oak-D-Pro-W. It is important to get the -W variants, as these are the wide FOV versions.

Pulled from the #helpful-content channel in the North Star Discord

This page has a handful of links that will help you learn more about #ProjectNorthstar and connect with the community, we highly recommend checking out the discord and hanging out with some of the awesome people there! It's the quickest way to get your questions answered.

Joining the #ProjectNorthstar discord server is the best way to get help with any troubles you run into! It's also a fun and friendly community, come hang out!

You can order ready to build kits, or pre-built kits here!

put together an incredible collection of knowledge about design for VR and AR. You can check it out here!

GitHub Build Guide

GitHub Repository

Forums

This medium article by @Tasuku is really good! Check it out for relevant links for Exii, 1-10.inc and other tweaks.

@mdrjjn put together this guide on how to build Exii Version 1. He made a video too!

Here's a link to Psychic Vr Lab's guide on going through the calibration process

@atlee19 put together this cool website with a bunch of Open Source Demos

@eswar made this awesome calibration walkthrough

@eswar also made this tutorial for 6dof tracking using a vive tracker!

Just joining? @callil made this awesome presentation for the New York meetup, it’s a great summary of what has happened so far!

Work In Progress documentation on how to setup

This is the homepage for the Combine Reality Deck-X variant of Northstar.

The Combine Reality Deck X is a variant of Release 3 designed by Noah Zerkin's team at smart-prototyping. It includes a new hub called "The Integrator" which includes microSD card storage, an Arduino and USB hub, an embedded Intel Realsense t261 sensor, and a control board for adjusting ergonomics like IPD and eye relief.

Please note that the GitHub repo for the CombineReality Deck X headset has three versions. The prints in the Deck X folder use heat-set inserts and are intended for users who will be assembling, hacking and taking apart their headset multiple times. The inserts help increase the lifespan of the 3D printed parts by reducing the stress and wear on the parts themselves. This version is not updated as frequently as the version without heat-set inserts. There's also a version of the Deck X for users who don't want to use heat-set inserts. Choose it only if you intend to adjust or rebuild the headset once or twice at most, because taking the headset apart and putting it back together without heat-set inserts will cause the mounting points to deteriorate over time. The prints in the 3.1.1 folder are intended for users who want to upgrade their existing 3.1 headset without reprinting the optics bracket.

The Integrator is our custom-built USB hub system originally created for the CombineReality Project North Star Deck X. The Integrator cuts down the use of cables and adds customizable buttons to the headset with the following components & features:

USB-C hub, two USB 3.1 ribbon connectors, and one USB 2.0 ribbon connector

3GB on-board flash drive (only works when connected to a USB 3.0 host)

Arduino-compatible microcontroller, featuring a Qwiic connector that can be used to connect additional sensors like an IMU, as well as HID buttons that can emulate keyboard keys.

A button breakout board is included, and the microcontroller is preflashed with firmware that maps the buttons to the default ergonomics adjustment keys (eye relief, eye position, and IPD). The microcontroller also allows for a manual power reset of the sensor USB ports via a GPIO pin.

A fan, the speed of which is controlled by the Arduino-compatible microcontroller. A thermistor for a more intelligent fan speed control.
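To illustrate what thermistor-based fan control can look like, here is a minimal sketch that converts an NTC thermistor resistance to a temperature with the Beta equation and then maps that temperature linearly onto an 8-bit PWM duty value. The thermistor parameters (10 kΩ at 25 °C, Beta 3950) and the 30 to 50 °C ramp are assumed example values; this is not the Integrator's actual firmware, which is not shown here.

```python
import math


def thermistor_temp_c(resistance_ohm: float, r0_ohm: float = 10_000.0,
                      t0_c: float = 25.0, beta: float = 3950.0) -> float:
    """Beta equation for an NTC thermistor: 1/T = 1/T0 + ln(R/R0)/B (T in kelvin)."""
    t0_k = t0_c + 273.15
    inv_t = 1.0 / t0_k + math.log(resistance_ohm / r0_ohm) / beta
    return 1.0 / inv_t - 273.15


def fan_duty(temp_c: float, t_min_c: float = 30.0, t_max_c: float = 50.0) -> int:
    """Map temperature to a 0-255 PWM duty: off below t_min, full speed above t_max."""
    if temp_c <= t_min_c:
        return 0
    if temp_c >= t_max_c:
        return 255
    return round(255 * (temp_c - t_min_c) / (t_max_c - t_min_c))


room_temp = thermistor_temp_c(10_000.0)  # resistance equal to R0
warm_temp = thermistor_temp_c(5_000.0)   # lower resistance means hotter for an NTC
duty = fan_duty(warm_temp)
```

A linear ramp like this avoids the fan abruptly switching between off and full speed as the headset warms up.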

The Integrator uses a modified version of the lilyPadUSB-caterina Arduino bootloader. The bootloader can be found here:

http://blog.leapmotion.com/north-star-open-source/

At Leap Motion, we envision a future where the physical and virtual worlds blend together into a single magical experience. At the heart of this experience is hand tracking, which unlocks interactions uniquely suited to virtual and augmented reality. To explore the boundaries of interactive design in AR, we created Project North Star, which drove us to push beyond the limitations of existing systems.

Today, we’re excited to share the open source schematics of the North Star headset, along with a short guide on how to build one. By open sourcing the design and putting it into the hands of the hacker community, we hope to accelerate experimentation and discussion around what augmented reality can be. You can download the package from our website or dig into the project on GitHub, where it’s been published under an Apache license.

Our goal is for the reference design to be accessible and inexpensive to build, using off-the-shelf components and 3D-printed parts. At the same time, these are still early days and we’re looking forward to your feedback on this initial release. The mechanical parts and most of the software are ready for primetime, while other areas are less developed. The reflectors and display driver board are custom-made and expensive to produce in single units, but become cost-effective at scale. We’re also exploring how the custom components might be made more accessible to everyone.

The headset features two 120 fps, 1600×1440 displays with a field of view covering over a hundred degrees combined. While the classic Leap Motion Controller’s FOV is significantly beyond existing AR headsets such as Microsoft Hololens and Magic Leap One, it felt limiting on the North Star headset. As a result, we used our next-generation ultra-wide tracking module. These new modules are already being embedded directly into upcoming VR headsets, with AR on the horizon.

Project North Star is very much a work in progress. Over the coming weeks, we’ll continue to post updates to the core release package. Let us know what you think in the comments and . If your company is interested in bringing North Star to the world, email us at .

It’s time to look beyond platforms and form factors, to the core user experience that makes augmented reality the next great computing medium. Let’s build it together.

Release 1 is the first version of the headset, which Leap Motion open sourced in 2018. Since then there have been multiple revisions to make the headset stronger, easier to print, and more comfortable. For a detailed writeup of the changes made in Release 3, check out the Release 3 Documentation.

The Leap Motion was designed to be able to support USB 3.0, however it currently only utilizes USB 2.0. This means you can use a standard USB micro-B connection with the sensor, which is useful for integrating it into smaller form factors, like an HMD.

Yes! The optics bracket will fit. You may have to rotate it a bit to fit properly on the build plate; here's a screenshot for reference. As noted in the image, the dimensions of the optics bracket with a roughly 22 degree offset are (X: 224.14mm, Y: 140.51mm, Z: 101.03mm).

This page goes over the general setup you should do after you've finished building and calibrating your headset.

There are currently three methods of developing software on Northstar headsets.

This is the recommended developer experience. Esky is currently built on Unity and has built in support for the MRTK. It has video passthrough with the t261/t265, temporal reprojection, and supports both the 2D and 3D calibration methods.

http://blog.leapmotion.com/northstar/

Leap Motion is a company that has always been focused on human-computer interfaces.

We believe that the fundamental limit in technology is not its size or its cost or its speed, but how we interact with it. These interactions define what we create, how we learn, how we communicate with each other. It would be no stretch of the imagination to say that the way we interact with the world around us is perhaps the very fabric of the human experience.

This version of the calibration system allows you to calibrate your headset with a single stereo camera

Above: A video walking you through the 2D calibration process for northstar.

http://blog.leapmotion.com/project-north-star-mechanical-and-calibration-update-3-1/

The future of open source augmented reality just got easier to build. Since our last major release, we’ve streamlined Project North Star even further, including improvements to the calibration system and a simplified optics assembly that 3D prints in half the time. Thanks to feedback from the developer community, we’ve focused on lower part counts, minimizing support material, and reducing the barriers to entry as much as possible. Here’s what’s new with version 3.1.

As we discussed in our post on the , small variations in the headset’s optical components affect the alignment of the left- and right-eye images. We have to compensate for this in software to produce a convergent image that minimizes eye strain.

http://blog.leapmotion.com/project-north-star-mechanical-update-1/

This morning, we released an update to the North Star headset assembly. The project CAD files now fit the Leap Motion Controller and add support for alternate headgear and torsion spring hinges.

With these incremental additions, we want to broaden the ability to put together a North Star headset of your own. These are still works in progress as we grow more confident with what works and what doesn’t in augmented reality – both in terms of industrial design and core user experience.

Integrator: Hardware and software that allow the components to communicate with each other.

Runtime: Software that acts as an intermediary between user applications and hardware.

Assembly: Hardware that houses all the components to create a complete headset.

These folders contain the Schematic, Firmware, and Bill of Materials used in building and programming the display driver board on the Project North Star AR Headset.

- A ribbon connector that lets the Arduino on the hub relay commands and debug output to and from the serial UART of the display driver board

- Ribbon adapter board for Intel® RealSense™ T261 embedded 6-DOF module (ribbon cable included)

- Ribbon adapter board for Leap Motion Controller (ribbon cable included)

Unity: This is the default Unity experience. It's bare-bones and built for the 3D calibration rig. If you're experienced with Unity and want to tinker with the original source code for North Star, this is the place for you.

SteamVR: The SteamVR integration allows any SteamVR game to run on a North Star headset. Hand tracking isn't a replacement for controllers yet, so you won't have a fun time in Beat Saber, but demos like Cat Explorer or the infamous cubes demo have full support for hand tracking.

Prebuilt Examples: There are a handful of prebuilt demos for North Star, including LeapPaint, Galaxies, and others. These will be linked on a separate page/database at a future date; for now, join the Discord and check the #showcase channel.

To make sure your headset works properly, plug in the power to the integrator board or driver board first, then the DisplayPort connection. On some headsets, plugging the display in first lets the driver board draw enough power through the DisplayPort connection to boot, but not enough to run properly. If you run into this issue, unplug your headset, then plug in power first, followed by the display connection.

Your North Star display will show up as a normal monitor and will look "upside-down" if viewed through the headset. This is normal; software written for the headset compensates for it. Make sure your headset is set up so it's to the right of your main display(s), and that the resolution is 2880x1600. You'll also want to make sure that the scale and layout section is set to 100%. By default, the headset will run at 90 Hz. Finally, ensure that your headset is set to extended mode and not mirrored.

There are a handful of demos that require Leap Motion's multi-device beta driver, located here. If you're having issues getting your Leap Motion Controller working in demos or Unity, this is probably the reason. Once you have the drivers installed, open the Leap Motion Control Panel and make sure that the connection is "good", as shown in the figure below.

In order to use your T261 / T265 sensor you'll need to install the RealSense SDK, located here. Be sure to install v2.53.1, as this is the last version that supports the RealSense tracking range. Once you have finished installing the SDK, open the RealSense Viewer application to ensure that your RealSense device is connected. If you are using the Deck X headset and your T261 is not showing up, you can take the following actions to resolve it:

1) Push down on both circular buttons on the headset for four seconds to power cycle the integrator board. This should cause the RealSense device to enumerate and cause Windows to detect it.

2) If step 1 did not work, try unplugging the headset and plugging it back in. Make sure your USB connection is plugged into a USB 3.1 port.

3) If neither of the above steps worked, try resetting the USB hub in Device Manager. This solution has solved most edge cases we've seen so far.

There are currently three methods of getting software running on North Star headsets.

Esky: This is the recommended developer experience. It has video passthrough with the T261 / T265, built-in support for the Mixed Reality Toolkit, and support for both 2D and 3D calibration methods.

Unity: This is the default Unity experience. It's bare-bones and built for the 3D calibration rig. If you're experienced with Unity and want to tinker with the original source code for North Star, this is the place for you.

SteamVR: The SteamVR integration allows any SteamVR game to run on a North Star headset. Hand tracking isn't a replacement for controllers yet, so you won't have a fun time in Beat Saber, but demos like Cat Explorer or the infamous cubes demo have full support for hand tracking.

Prebuilt Examples: There are a handful of prebuilt demos for North Star, including LeapPaint, Galaxies, and others. These will be linked on a separate page/database at a future date; for now, join the Discord and check the #showcase channel.

The coming of virtual reality has signaled a great moment in the history of our civilization. We have found in ourselves the ability to break down the very substrate of reality and create ones anew, entirely of our own design and of our own imaginations.

As we explore this newfound ability, it becomes increasingly clear that this power will not be limited to some ‘virtual world’ separate from our own. It will spill out like a great flood, uniting what has been held apart for so long: our digital and physical realities.

In preparation for the coming flood, we at Leap Motion have built a ship, and we call it Project North Star.

North Star is a full augmented reality platform that allows us to chart and sail the waters of a new world, where the digital and physical substrates exist as a single fluid experience.

The first step of this endeavor was to create a system with the technical specifications of a pair of augmented glasses from the future. This meant our prototype had to far exceed the state of the art in resolution, field-of-view, and framerate.

Borrowing components from the next generation of VR systems, we created an AR headset with two low-persistence 1600×1440 displays pushing 120 frames per second with an expansive visual field over 100 degrees. Coupled with our world-class 180° hand tracking sensor, we realized that we had a system unlike anything anyone had seen before.

All of this was possible while keeping the design of the North Star headset fundamentally simple – under one hundred dollars to produce at scale. So although this is an experimental platform right now, we expect that the design itself will spawn further endeavors that will become available to the rest of the world.

To this end, next week we will make the hardware and related software open source. The discoveries from these early endeavors should be available and accessible to everyone.

We’ve got a long way to go still, so let’s go together.

We hope that these designs will inspire a new generation of experimental AR systems that will shift the conversation from what an AR system should look like, to what an AR experience should feel like.

Over the past month we’ve hinted at some of the characteristics of this platform, with videos on Twitter that have hit the front page of Reddit and collected millions of views from people around the world.

Over the next few weeks we will be releasing blog posts and videos charting our discoveries and reflections in the hope that this will create an evolving and escalating conversation around the nature of this new world we’re heading towards.

We’re going to take a bit of time to talk about the hardware itself, but it’s important to understand that, at the end of the day, it’s the experience that matters most. This platform lets us forget the limitations of today’s systems; it lets us focus on the experience, the software and the interface, which is the core of what Leap Motion is about.

The journey towards the hardware of a perfect AR headset is not complete and will not be for some time, but Project North Star gives us perhaps the first glimpse that we’ve ever had. It helps us ask the right questions, find the right answers and start to chart the course to a future we all want to live in, where technology empowers humanity to solve the problems of today and those to come.

Download the realsense-integration branch of the following repo: https://github.com/BryanChrisBrown/ProjectNorthStar/tree/realsense-integration

Set up Python with the following dependencies:

1) pyrealsense2

2) opencv-contrib-python

3) numpy
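Before running the calibration scripts, it can help to confirm that the three packages are importable. The helper below is a minimal sketch and is not part of the repo: `missing_modules` and `REQUIRED_MODULES` are hypothetical names, and the sketch assumes that `opencv-contrib-python` is imported under the module name `cv2`.

```python
import importlib.util

# Import names for the packages listed above. Note that the
# opencv-contrib-python package is imported as "cv2".
REQUIRED_MODULES = ["pyrealsense2", "cv2", "numpy"]


def missing_modules(names):
    """Return the module names from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All calibration dependencies are importable.")
```

If anything is reported as missing, install it with pip before moving on to the capture step.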

Print the calibration stand and the Intel RealSense T265 mount; M3x12mm screws are used to mount the T265 itself.

If using a Deck X and t261 mount, use the instead, the screws are included in your Deck X Kit.

Run through the following steps to calibrate your headset.

captureGraycodes.py - run python captureGraycodes.py to run this script

Ensure that your headset is placed on the calibration stand, with the stand's camera looking through it where the users' eyes will be.

On line 44 there is a line of code that changes the window offset for the graycode generator.

This example moves the window 1920 pixels to the right, and 0 up and down. You'll want the X value (1920) to be your main monitor's width in pixels: `cv2.moveWindow("Graycode Viewport", 1920, 0)`
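If more than one display sits to the left of the headset, the X offset would presumably be the combined width of those displays. The sketch below illustrates that arithmetic; it is not taken from captureGraycodes.py. The function names are hypothetical, and it assumes the displays form a single horizontal row starting at x = 0, with the headset as the rightmost display and scaling set to 100%.

```python
def graycode_window_origin(display_widths_left_of_headset):
    """Return the (x, y) pixel position for the graycode window.

    Assumes the displays sit in a single horizontal row, the leftmost one
    starts at x = 0, and the headset is the rightmost display.
    """
    return sum(display_widths_left_of_headset), 0


def place_graycode_window(x, y, name="Graycode Viewport"):
    """Create the viewport window and move it onto the headset display."""
    import cv2  # imported lazily so the helper above works without OpenCV

    cv2.namedWindow(name, cv2.WINDOW_NORMAL)
    cv2.moveWindow(name, x, y)
```

With a single 1920-pixel-wide monitor, `graycode_window_origin([1920])` gives `(1920, 0)`, matching the value used in the script.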

It helps to place a piece of cloth over the rig to shield the cameras + headset from ambient light.

The sequence of binary codes will culminate in a 0-1 UV mapping, saved to "./WidthCalibration.png" and "./HeightCalibration.png" in your main folder.
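To make the idea of gray codes turning into a 0-1 mapping more concrete, here is a small NumPy sketch of the principle along one axis. It is an illustration only and does not reproduce what captureGraycodes.py does internally; all function names are hypothetical, and a real capture would also need thresholding of camera images, which is left out here.

```python
import numpy as np


def graycode_patterns(width):
    """Return (patterns, bits): one row of 0/1 stripe values per bit plane."""
    bits = max(1, int(width - 1).bit_length())
    columns = np.arange(width)
    gray = columns ^ (columns >> 1)          # binary-reflected Gray code
    shifts = np.arange(bits - 1, -1, -1)     # most significant bit first
    patterns = (gray[None, :] >> shifts[:, None]) & 1
    return patterns.astype(np.uint8), bits


def decode_graycode(observed_bits):
    """Turn per-pixel bit observations (MSB first) back into column indices."""
    bits = observed_bits.shape[0]
    gray = np.zeros(observed_bits.shape[1:], dtype=np.int64)
    for plane in observed_bits:
        gray = (gray << 1) | plane.astype(np.int64)
    binary = gray.copy()
    shift = 1
    while shift < bits:                      # prefix XOR undoes the Gray coding
        binary ^= binary >> shift
        shift <<= 1
    return binary


def to_uv(indices, width):
    """Normalize decoded column indices into the 0-1 range."""
    return indices / float(width - 1)
```

Each displayed pattern contributes one bit per camera pixel; stacking the bits identifies which display column that pixel saw, and dividing by the display width gives the 0-1 coordinate. The same procedure applied to rows would give the other axis.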

calibrateGraycodes.py

Running this script will fit a 3rd-Degree 2D Polynomial to the left and right "eye"'s X and Y distortions.

This polynomial will map from each display's 0-1 UV Coordinates to rectilinear coordinates (where a 3D ray direction is just (x, y, 1.0)).
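The fit described above can be sketched with an ordinary least-squares solve. This is a hedged illustration rather than the code in calibrateGraycodes.py: the function names are hypothetical, and it assumes that "3rd-degree" means all monomials u^i v^j with i + j <= 3 (ten terms per output); the actual script may use a different term set or ordering.

```python
import numpy as np


def poly_terms(u, v, degree=3):
    """Design matrix with one column per monomial u**i * v**j, i + j <= degree."""
    u = np.asarray(u, dtype=float).ravel()
    v = np.asarray(v, dtype=float).ravel()
    columns = [u**i * v**j
               for i in range(degree + 1)
               for j in range(degree + 1 - i)]
    return np.stack(columns, axis=1)


def fit_distortion(u, v, x, y, degree=3):
    """Least-squares fit of display UV -> rectilinear (x, y)."""
    terms = poly_terms(u, v, degree)
    targets = np.stack([np.ravel(x), np.ravel(y)], axis=1)
    coeffs, *_ = np.linalg.lstsq(terms, targets, rcond=None)
    return coeffs  # shape (10, 2) for degree 3


def uv_to_ray(coeffs, u, v, degree=3):
    """Evaluate the fit and return ray directions (x, y, 1.0)."""
    xy = poly_terms(u, v, degree) @ coeffs
    return np.column_stack([xy, np.ones(len(xy))])
```

One such fit would be computed per eye, so each display ends up with its own pair of X and Y coefficient sets.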

When you are finished, you may paste the output of calibrateGraycodes.py into this diagnostic shadertoy to check for alignment. A note on captureGraycodes.py: ensure that your headset is plugged in and displaying imagery from your desktop before running it, since that script displays a sequence of gray codes on your North Star and captures them at the same time.

Additionally, there should be a NorthStarCalibration.json in this directory which you may use in the Unity implementation.

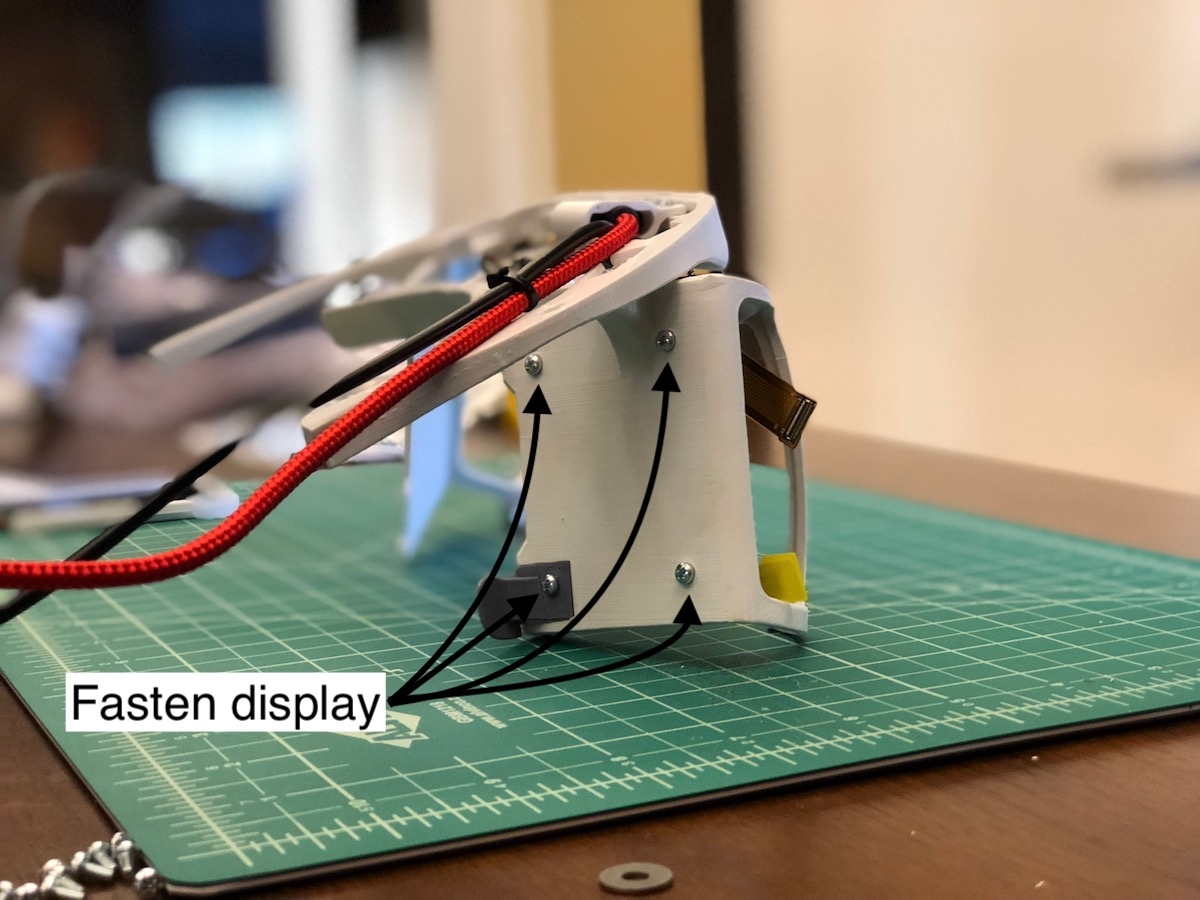

Building on feedback from the developer community, we’ve made the assembly easier and faster to put together. Mechanical Update 3.1 introduces a simplified optics assembly, designated #130-000, that cuts print time in half (as well as being much sturdier).

The biggest cut in print time comes from the fact that we no longer need support material on the lateral overhangs. In addition, two parts were combined into one. This compounding effect saves an entire workday’s worth of print time!

Left: 1 part, 95g, 7 hours, no supports. Right: 2 parts, 87g, 15 hour print, supports needed.

The new assembly, #130-000, is backwards compatible with Release 3. It replaces #110-000 and #120-000, the optics assembly and electronics module respectively. Check out the assembly drawings in the GitHub repo for the four parts you need!

Last but not least, we’ve made a small cutout for the power pins on the driver board mount. When we received our NOA Labs driver board, we quickly noticed the interference and made the change to all the assemblies.

This change makes it easy if you’re using pins or soldered wires, either on the top or bottom.

Want to stay in the loop on the latest North Star updates? Join the discussion on Discord!

This alternate 3D printed bracket is a drop-in replacement for Project North Star. Since parts had to move to fit the Leap Motion Controller at the same origin point, we took the opportunity to cover the display driver board and thicken certain areas. Overall these updates make the assembly stiffer and more rugged to handle.

When we first started developing North Star prototypes, we used 3M’s Speedglas Utility headgear. At the time, the optics would bounce around, causing the reflected image to shake wildly as we moved our heads. We minimized this by switching to the stiffer Miller headgear and continued other improvements for several months.

However, the 3M headgear was sorely missed, as it was simple to put on and less intimidating for demos. Since then we added cheek alignment features, which solved the image bounce. As a result we’ve brought back the earlier design as a supplement to the release headgear. The headgear and optics are interchangeable – only the hinges need to match the headgear. Hopefully this enables more choices in building North Star prototypes.

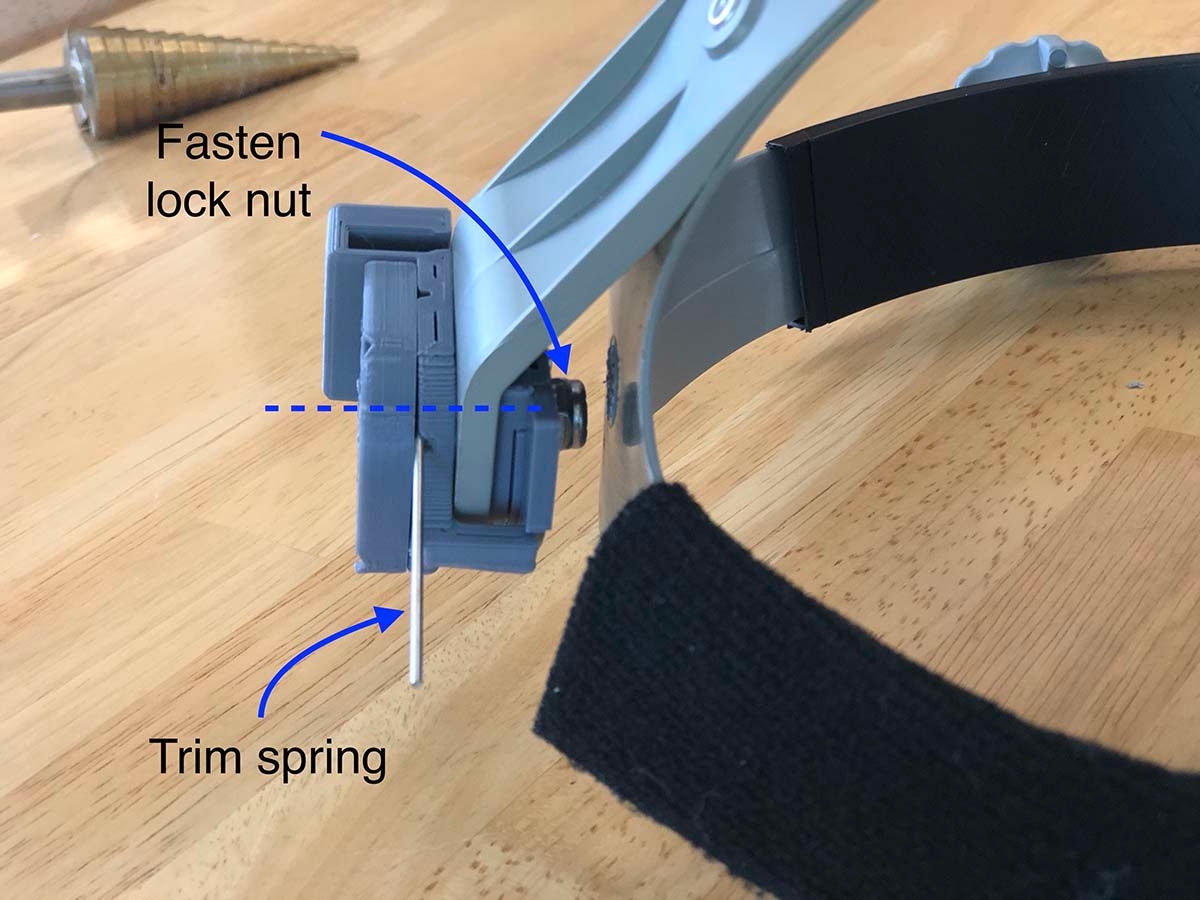

One of the best features of the old headgear was torsion hinges, which we’ve introduced with the latest release. Torsion hinges lighten the clamping load needed to keep the optics from pressing on users’ faces. (Think of a heavy VR HMD – the weight resting on the nose becomes uncomfortable quickly.)

Two torsion springs constantly apply twisting force on the aluminum legs, fighting gravity acting on the optics. The end result is the user can acutely suspend the optics above the nose, and even completely flip up the optics with little effort. After dusting off the original hinge prototypes, rotation limits and other simple modifications were made (e.g. using the same screws as the rest of the assembly). Check out the build guide for details.

We can’t wait to share more of our progress in the upcoming weeks – gathering feedback, making improvements, and seeing what you’ve been doing with Leap Motion and AR. The community’s progress so far has been inspirational. Given the barriers to producing reflectors, we’re currently exploring automating the calibration process as well as a few DIY low-cost reflector options.

You can catch up on the updated parts on the Project North Star documentation page, and print the latest files from our GitHub project. Come back soon for the latest updates!


sudo apt-key adv --keyserver keys.gnupg.net --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE || sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE

sudo add-apt-repository "deb https://librealsense.intel.com/Debian/apt-repo focal main" -u

sudo apt-get install librealsense2-dkms librealsense2-utils librealsense2-dev librealsense2-dbg

git clone https://gitlab.freedesktop.org/monado/monado.git

cd monado

meson build

sudo ninja -C build install -j24

Welcome to the next generation of open source spatial computing.

Northstar Next is a new single-cable (USB Type-C) Project North Star variant kit, cost-optimized and paired with an example of a lightweight, modular headset design. The single cable and the modularity are the biggest differences from the previous version. The individual parts are as follows:

Thinner, lighter, more easily mountable! Still has the massive FoV of Leap Motion’s original optics design.

USB-C with DisplayPort Alt-Mode (Not all USB-C host ports support DisplayPort; please make sure in advance that your intended host device has at least one that does.)

Uses the same 3.5” 1440 x 1600 VS035ZSM-NW0-69P0 displays from BOE (Grade B*)

LT7911D display controller (good relationship with manufacturer, good support, and a stable supply)

The D variant of the LT7911 series has two MIPI outputs, while together the displays have four. We’re pushing the limit of what a single 4-channel MIPI port can handle, with the benefit of using 25-pin ribbon cables with only half the pins of their predecessors.

Connects to display driver board and doesn’t require any additional external cables

4-port USB 2 High Speed Hub with FE1.1 controller IC.

1A integrated fuse with 1.5A cutoff

3x 6-pin ribbon connectors for USB 2

Driver board: Breakout Audio ports and Brightness controlling pins

Adapters: the kit includes several USB adapters so that you can connect it to a variety of different sensors that are in use in the North Star community:

- USB-C male connector supporting USB 2 High Speed, with both 6-pin and 12-pin ribbon connectors for direct connection to the Display Driver board or to one of the 6-pin connectors on the PNS Hub board.

- MicroUSB male connector with upstream 6-pin connector; similar to and compatible with the MicroUSB adapter included with our older kits. Works with PNS Hub board.

- Ultraleap SIR170 / Rigel adapter with upstream 6-pin connector for use with our PNS Hub board.

- T261 USB2 High Speed adapter with 6-pin connector. (Note: with USB2 bandwidth, you won’t be able to stream video from the T261 when used concurrently with an Ultraleap or Leap Motion hand-tracking module. You can, however, get a low-latency stream of the 6DOF tracking data generated onboard the device.) The RealSense T261 has been discontinued by Intel, but can still be found. We include this adapter mainly for the convenience of those North Star builders who already have this module.

Easily removable and swappable core electronics bay and sensor pods

Snap-together / snap-apart design

Input Interface: USB-C DisplayPort Alt-Mode (requires host port compatibility; a full-featured USB-C 3.2, Thunderbolt 3 or better, or spec-compliant USB 4 are all safe bets)

Displaying Resolution: 2880x1600 pixels (2x 1600x1440 Displays)

Frame Rate: 85-90Hz

Support Brightness control by adjusting digital potentiometer (

This page will walk you through installing and running the SteamVR driver. It is currently supported on Windows, with Linux support in progress. A launcher/installer is also in progress.

This SteamVR driver is still a work in progress. If you run into any issues, please reach out on Discord.

- Head Tracking

- Hand Tracking

- View Projection

- Skeletal tracking

- Basic input

- T265 Sensor integration

- Gesture recognizer

Versions of vendor libraries not included, here is where to get them:

You will need to install the leap motion multi-device drivers in order for this driver to work.

If using the structure core you will need the CrossPlatform SDK 0.7.1 and the Perception Engine 0.7.1

If using the intel realsense t265, you should install the

We have a prebuilt version of the driver available . You can place it in the following directory, or use vrpathreg as shown below.

In order to build from source you will need to install with c++, .net and C++ modules v142. In addition you'll also need to install and .

All commands below are run in windows command prompt

In the folder in which you want the repo to exist, run the following commands:

- Open the generated solution and set northstar to the startup project (right click the project and choose the set as startup where the gear icon is) and build.

Make sure to target x64 and a Release build to remove any object creation slowness.

- The release will be in `build/Release/` and will consist of DLL files.

- Copy all the DLLs to wherever you want to install from. They should be combined into the `resources/northstar/bin/win64` directory; create it if it does not exist and put all generated DLLs inside.

- Next register the driver with steamVR (using vrpathreg tool found in SteamVR bin folder). This is located at

C:\Program Files (x86)\Steam\steamapps\common\SteamVR\bin\win64\vrpathreg.exe

vrpathreg is a command line tool, you have to run it via the command prompt. To do this, follow these steps.

1) open command prompt

2) run cd C:\Program Files (x86)\Steam\steamapps\common\SteamVR\bin\win64\

3) run vrpathreg adddriver <full_path_to>/resources/northstar

4) you can verify the driver has been added by typing vrpathreg in command prompt, it will show you a list of drivers installed.

- At this point vrpathreg should show it registered under "external drivers". To disable it, you can either disable it in SteamVR developer options under startup and manage addons, or use vrpathreg removedriver <full_path_to>/resources/northstar

- Running SteamVR with the North Star connected (and no other HMDs connected) should work at this point, but probably won't have the right configuration for your HMD. Take the .json file from calibrating your North Star and convert it to the format found in resources/northstar/resources/settings/default.vrsettings

- Restart SteamVR. If the driver is taking a long time to start, chances are it got built in debug mode; Release mode optimizes away a bunch of intermediate object creation during the lens distortion solve that happens at startup. If things are still going south, please file a bug report here:

- if you wish to remove controller emulation, disable the leap driver in SteamVR developer settings.
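The copy-and-register steps above can also be scripted. The following is a minimal sketch under stated assumptions, not an official installer: `stage_driver_dlls` and `vrpathreg_command` are hypothetical helper names, the build output is assumed to contain plain `.dll` files directly in `build/Release/`, and the only vrpathreg subcommands used are the `adddriver` and `removedriver` ones mentioned in this guide.

```python
import shutil
from pathlib import Path


def stage_driver_dlls(build_dir, driver_root):
    """Copy every .dll from build_dir into <driver_root>/bin/win64."""
    target = Path(driver_root) / "bin" / "win64"
    target.mkdir(parents=True, exist_ok=True)  # create it if it does not exist
    copied = []
    for dll in sorted(Path(build_dir).glob("*.dll")):
        shutil.copy2(dll, target / dll.name)
        copied.append(dll.name)
    return copied


def vrpathreg_command(vrpathreg_exe, driver_root, action="adddriver"):
    """Build the argument list for registering or removing the driver."""
    if action not in ("adddriver", "removedriver"):
        raise ValueError("action must be 'adddriver' or 'removedriver'")
    return [str(vrpathreg_exe), action, str(Path(driver_root).resolve())]
```

Here `driver_root` is the `resources/northstar` folder. The list returned by `vrpathreg_command` can be handed to `subprocess.run` on a machine with SteamVR installed; building the command separately keeps the sketch usable without SteamVR present.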

http://blog.leapmotion.com/bending-reality-north-stars-calibration-system/

Bringing new worlds to life doesn’t end with bleeding-edge software – it’s also a battle with the laws of physics. Project North Star is a compelling glimpse into the future of AR interaction and an exciting engineering challenge, with wide-FOV displays and optics that demanded a whole new calibration and distortion system.

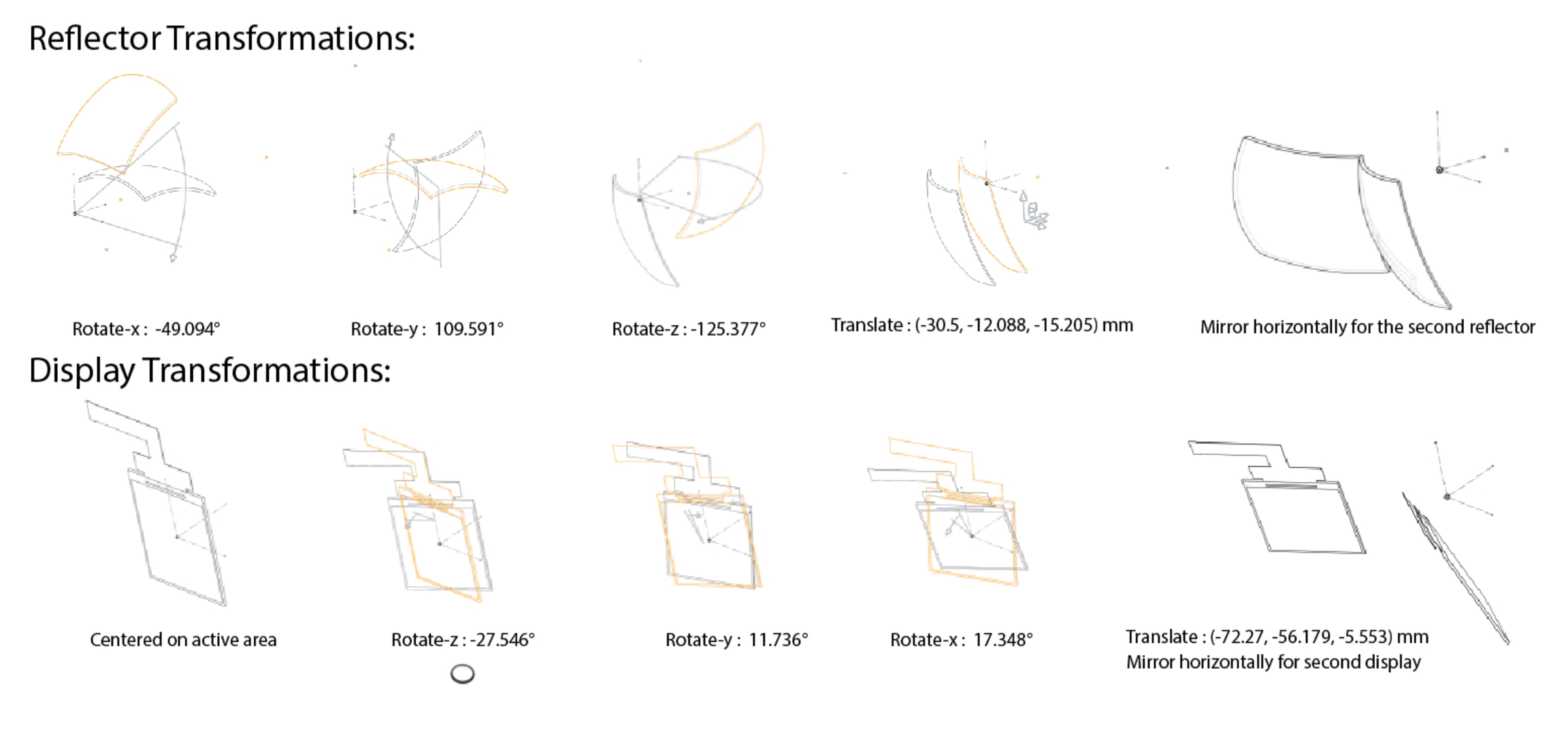

Just as a quick primer: the North Star headset has two screens on either side. These screens face towards the reflectors in front of the wearer. As their name suggests, the reflectors reflect the light coming from the screens, and into the wearer’s eyes.

As you can imagine, this requires a high degree of calibration and alignment, especially in AR. In VR, our brains often gloss over mismatches in time and space, because we have nothing to visually compare them to. In AR, we can see the virtual and real worlds simultaneously – an unforgiving standard that requires a high degree of accuracy.

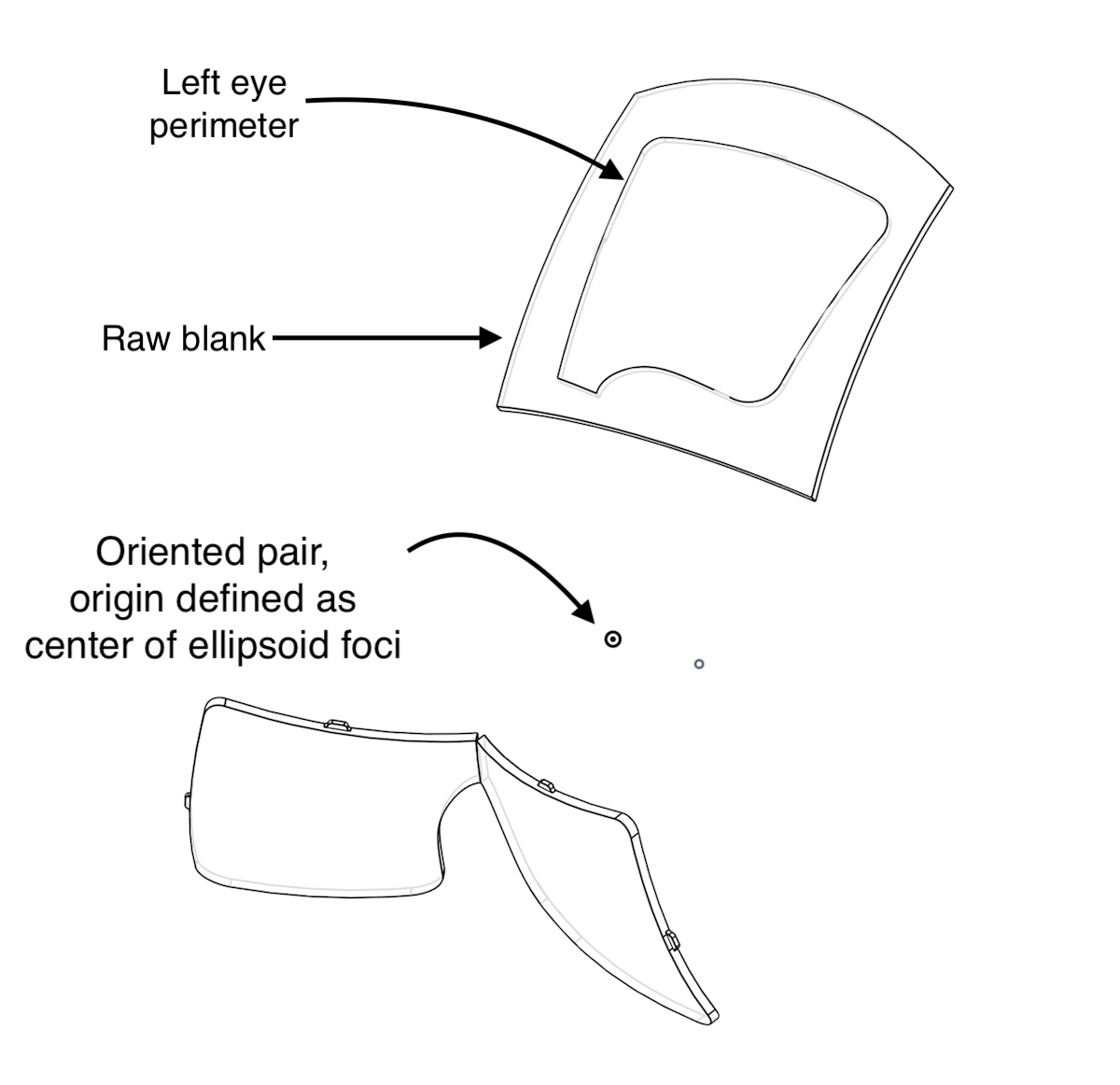

North Star sets an even higher bar for accuracy and performance, since it must be maintained across a much wider field of view than any previous AR headset. To top it all off, North Star’s optics create a stereo-divergent off-axis distortion that can’t be modelled accurately with conventional radial polynomials.

How can we achieve this high standard? Only with a distortion model that faithfully represents the physical geometry of the optical system. The best way to model any optical system is by raytracing – the process of tracing the path rays of light travel from the light source, through the optical system, to the eye. Raytracing makes it possible to simulate where a given ray of light entering the eye came from on the display, so we can precisely map the distortion between the eye and the screen.
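As a rough illustration of tracing a ray backwards from the eye to the display, the sketch below bounces a single ray off a mirror and intersects it with a display plane. It is deliberately simplified and is not the North Star raytracer: a flat mirror stands in for the headset's actual reflector geometry, and every function name and coordinate here is a hypothetical choice made for the example.

```python
import numpy as np


def reflect(direction, normal):
    """Mirror a ray direction about a surface normal: d - 2 (d . n) n."""
    d = np.asarray(direction, dtype=float)
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    return d - 2.0 * np.dot(d, n) * n


def intersect_plane(origin, direction, plane_point, plane_normal):
    """Return the point where a ray meets a plane, or None if it never does."""
    o = np.asarray(origin, dtype=float)
    d = np.asarray(direction, dtype=float)
    p = np.asarray(plane_point, dtype=float)
    n = np.asarray(plane_normal, dtype=float)
    denom = np.dot(d, n)
    if abs(denom) < 1e-12:
        return None  # ray is parallel to the plane
    t = np.dot(p - o, n) / denom
    return o + t * d if t > 0 else None


def eye_ray_to_display(eye, view_dir, mirror_point, mirror_normal,
                       display_point, display_normal):
    """Trace eye -> flat mirror -> display plane and return the hit point."""
    hit = intersect_plane(eye, view_dir, mirror_point, mirror_normal)
    if hit is None:
        return None
    bounced = reflect(view_dir, mirror_normal)
    return intersect_plane(hit, bounced, display_point, display_normal)
```

Repeating this for every viewing direction gives a lookup from eye rays to display positions, which is the kind of mapping a distortion correction needs.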

But wait! This only works properly if we know the geometry of the optical system. This is hard with modern small-scale prototyping techniques, which achieve price effectiveness at the cost of poor mechanical tolerancing (relative to the requirements of near-eye optical systems). In developing North Star, we needed a way to measure these mechanical deviations to create a valid distortion mapping.

One of the best ways to understand an optical system is… looking through it! By comparing what we see against some real-world reference, we can measure the aggregate deviation of the components in the system. A special class of algorithms called “numerical optimizers” lets us solve for the configuration of optical components that minimizes the distortion mismatch between the real-world reference and the virtual image.

Leap Motion North Star calibration combines a foundational principle of Newtonian optics with virtual jiggling. For convenience, we found it was possible to construct our calibration system entirely in the same base 3D environment that handles optical raytracing and 3D rendering. We begin by setting up one of our newer 64mm camera modules inside the headset and pointing it towards a large flat-screen LCD monitor. A pattern on the monitor lets us triangulate its position and orientation relative to the headset rig.

With this, we can render an inverted virtual monitor on the headset in the same position as the real monitor in the world. If the two versions of the monitor matched up perfectly, they would additively cancel out to uniform white. (Thanks Newton!) The module can now measure this “deviation from perfect white” as the distortion error caused by the mechanical discrepancy between the physical optical system and the CAD model the raytracer is based on.

This “one-shot” photometric cost metric allows for a speedy enough evaluation to run a gradient-less simplex Nelder-Mead optimizer in-the-loop. (Basically, it jiggles the optical elements around until the deviation is below an acceptable level.) While this might sound inefficient, in practice it lets us converge on the correct configuration with a very high degree of precision.
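The "jiggle until white" loop can be imitated in a few lines. The sketch below is a toy under loud assumptions, not Leap Motion's calibration code: the pattern, the two-parameter offset standing in for the optical configuration, and the compact Nelder-Mead variant (reflection, expansion, inside contraction, shrink) are all chosen for illustration.

```python
import numpy as np


def pattern(x, y):
    """Smooth stand-in for the calibration pattern, with values in [0, 1]."""
    return 0.5 + 0.25 * (np.sin(3.0 * x) + np.cos(2.0 * y))


GRID_X, GRID_Y = np.meshgrid(np.linspace(0, 1, 32), np.linspace(0, 1, 32))
TRUE_OFFSET = np.array([0.04, -0.03])  # hypothetical mechanical misalignment


def deviation_from_white(params):
    """Mean squared deviation of (real + inverted virtual) from uniform white."""
    real = pattern(GRID_X, GRID_Y)
    shift = TRUE_OFFSET - np.asarray(params)
    virtual = 1.0 - pattern(GRID_X + shift[0], GRID_Y + shift[1])
    return float(np.mean((real + virtual - 1.0) ** 2))


def nelder_mead(cost, x0, step=0.05, tol=1e-12, max_iter=2000):
    """Gradient-free simplex search; returns (best_point, best_cost)."""
    x0 = np.asarray(x0, dtype=float)
    n = x0.size
    simplex = np.vstack([x0] + [x0 + step * np.eye(n)[i] for i in range(n)])
    values = np.array([cost(p) for p in simplex])
    for _ in range(max_iter):
        order = np.argsort(values)
        simplex, values = simplex[order], values[order]
        size = (simplex.max(axis=0) - simplex.min(axis=0)).max()
        if values[-1] - values[0] < tol and size < 1e-6:
            break
        centroid = simplex[:-1].mean(axis=0)
        worst = simplex[-1].copy()
        reflected = 2.0 * centroid - worst
        f_r = cost(reflected)
        if f_r < values[0]:
            expanded = 3.0 * centroid - 2.0 * worst
            f_e = cost(expanded)
            if f_e < f_r:
                simplex[-1], values[-1] = expanded, f_e
            else:
                simplex[-1], values[-1] = reflected, f_r
        elif f_r < values[-2]:
            simplex[-1], values[-1] = reflected, f_r
        else:
            contracted = 0.5 * (centroid + worst)
            f_c = cost(contracted)
            if f_c < values[-1]:
                simplex[-1], values[-1] = contracted, f_c
            else:  # shrink every vertex towards the current best one
                simplex[1:] = simplex[0] + 0.5 * (simplex[1:] - simplex[0])
                values[1:] = [cost(p) for p in simplex[1:]]
    best = int(np.argmin(values))
    return simplex[best], values[best]
```

Calling `nelder_mead(deviation_from_white, [0.0, 0.0])` should walk the two parameters towards `TRUE_OFFSET`, at which point the real and inverted virtual patterns sum to uniform white and the cost drops to zero. A real system would have many more parameters and a camera image in place of the synthetic pattern.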

This might be where the story ends – but there are two subtle ways that the optimizer can reach a wrong conclusion. The first kind of local minimum rarely arises in practice. The more devious kind comes from the fact that there are multiple optical configurations that can yield the same geometric distortion when viewed from a single perspective. The equally devious solution is to film each eye’s optics from two cameras simultaneously. This lets us solve for a truly accurate optical system for each headset that can be raytraced from any perspective.

In static optical systems, it usually isn’t worth going through the trouble of determining per-headset optical models for distortion correction. However, near-eye displays are anything but static. Eye positions change for lots of reasons – different people’s interpupillary distances (IPDs), headset ergonomics, even the gradual shift of the headset on the head over a session. Any one of these factors alone can hamper the illusion of augmented reality.

Fortunately, by combining the raytracing model with eye tracking, we can compensate for these inconsistencies in real-time for free! We’ll cover the North Star eye tracking experiments in a future blog post.

http://blog.leapmotion.com/project-north-star-mechanical-update-3/

Today we’re excited to share the latest major design update for the Leap Motion North Star headset. North Star Release 3 consolidates several months of research and insight into a new set of 3D files and drawings. Our goal with this release is to make Project North Star more inviting, less hacked together, and more reliable. The design includes more adjustments and mechanisms for a greater variety of head and facial geometries – lighter, more balanced, stiffer, and more inclusive.

With each design improvement and new prototype, we’ve been guided by the experiences of our test participants. One of our biggest challenges was the facial interface, providing stability without getting in the way of emoting.

Now, the headset only touches the user’s forehead, and optics simply “float” in front of you. The breakthrough was allowing the headgear and optics to self-align between face and forehead independently. As a bonus, for the first time, it’s usable with glasses!

Release 3 has a lot packed into it. Here are a few more problems we tackled:

New forehead piece. While we enjoyed the flexibility of the welder’s headgear, it interfered with the optics bracket, preventing the optics from getting close enough. Because the forehead band sat so low, the welder’s headgear also required a top strap.

Our new headgear design sits higher and wider, taking on the role of the top strap while dispersing more weight. Choosing against a top strap was important to make it self-evident how the device is worn, making it more inviting and a more seamless experience. New users shouldn’t need help to put on the device.

Another problem with the previous designs was slide-away optics. The optics bracket would slide away from the face occasionally, especially if the user tried to look downward.

Now, in addition to the new forehead, brakes are mounted to each side of the headgear. The one-way brake mechanism allows the user to slide the headset towards their face, but not outwards without holding the brake release. The spring is strong enough to resist slipping – even when looking straight down – but can be easily defeated by simply pulling with medium force in case of emergency.

Weight, balance, and stiffness come as a whole. Most of the North Star headset’s weight comes from the cables. Counterbalancing the weight of the optics by guiding the cables to the back is crucial for comfort, even if no weight is removed. Routing the cables evenly between left and right sides ensures the headset isn’t imbalanced.

By thickening certain areas and interlocking all the components, we stiffened the design so the whole structure acts cohesively. Now there is much less flexure throughout. Earlier prototypes included aluminum rods to stiffen the structure, but clever geometry and better print settings offered similar performance (with a few grams of weight saved)! Finally, instead of thread-forming screws, brass inserts were added for a more reliable and repeatable connection.

Interchangeable focal distances. Fixed focal distances are one of the leading limiting factors in current VR technology. Our eyes naturally change focus to accommodate the real world, while current VR tech renders everything to the same fixed focus. We spent considerable time determining where North Star’s focal distance should be set, and found that it depends on the application. Today we’re releasing two pairs of display mounts – one at 25cm (the same as previous releases) and the other at an arm’s length, 75cm. Naturally 75cm is much more comfortable for content further away.

Finally, a little trick we developed for this headgear design: bending 3D prints. An ideal VR/AR headset is light yet strong, but 3D prints are anisotropic – strong in one direction, brittle in another. This means that printing large thin curves will likely result in breaks.

Instead, we printed most of the parts flat. While the plastic is still warm from the print bed, we drape the plastic over a mannequin head. A few seconds later, the plastic cools enough to retain the curved shape. The end result is very strong while using very little plastic.

While the bleeding edge of Project North Star development is in our San Francisco tech hub, the work of the open source community is a constant source of inspiration. With so many people independently 3D printing, adapting, and sharing our AR headset design, we can’t wait to see what you do next with Project North Star. You can download the latest designs from the.

This page was contributed by Guillermo Guillesanbri.

The Deck X headset uses the same headgear from release 3. The build process is documented below with photos.

This page was contributed by Guillermo Guillesanbri

Starting from the end of the document, the first page with instructions covers the 230-000 assembly.

These parts were updated not long ago, so if you downloaded them recently, your set should be missing the two cable guides shown at the top of the picture above and should include a reinforced version of 230-001/240-001.

When assembling the headgear, take into account that even if the assembly drawing doesn’t show it, you have to put the welding headgear between the hinge parts.

To put the spring in place, you will have to turn it 180 degrees to load it. This is easily done by seating the spring in both parts and then rotating one of them.

First, we need to bend the forehead headgear span. To do this, it’s recommended to set the heated bed of your 3D printer to 70 °C, which is enough to shape the part. If you don’t have access to a heated print bed, don’t worry; other options are:

Using a Heat Gun.

Using hot water.

Anything that gets the piece to ~70 °C.

You can now shape the piece with a mannequin head or your own head if you cover your skin to avoid first degree burns (not really, just be careful).

Once the piece is bent, it’s time to wrap the foam. This is how I did it; I’m not 100% sure it is the best or correct way, but it worked out fine for me. Take into account that the felt-like side is the smoothest one and should be the one in contact with your forehead.

Now, let’s put the hinges in place. Again, remember to put pieces 230-000 and 240-000 between the two pieces of each hinge. I found it easier to lock the hinge base in place (shown in the picture below) and then screw the cap on top.

You should now have the headgear assembled, just like the pictures below, with two differences: the reinforced parts and the absence of the cable guides.

This guide is also available as a PDF:

A video guide from Tasuku Takahashi is available below. It's best used as a supplement to the written documentation. If you run into problems or have questions, feel free to reach out on the Discord.

Item No 6 (#230-004) of the Left Brake Assembly was removed and thus no longer packaged due to undesired cable catching. The new method of managing cables is by attaching a zip tie to the end cap.

This folder contains the mechanical assets necessary to build a Project North Star AR Headset with calibration stand.

Mechanical Release 3 bundles together all the lessons we learned into a new set of 3D files and drawings. Its main objective is to be more inviting, less hacked together, and more reliable. The design includes more adjustments and mechanisms for a larger variety of heads and facial geometries. Overall, the assembly is lighter, more balanced, and stiffer. The parts were designed for an FDM-style 3D printer.

This is a work in progress. This is not a finished guide, nor end-user friendly. Major sections are missing. Assembly requires care and patience. Nothing worth having is ever easy.

Open the and construct each sub-assembly as illustrated. A full list of the parts needed can be found in the . Not all sub-assemblies are required as there are multiple designs to choose from. Additionally, the CAD files are included in STEP format to help design new parts.

The headset consists of two basic sections: the optics and the headgear. The optics subassembly currently has two variants: Release 3 optics and Update 3-1 (i.e. the simplified optics assembly). The headgear assembly uses the rear adjustment mechanism from a Miller-branded welder’s helmet, but several other models can be made to work.

Functionally, the two optics assemblies are the same. Release 3 is closer to the original design aesthetic and is marginally (8g) lighter. On the other hand, Update 3-1 halves the print time by removing the need for supports on the sides. Overall, the simplified 3-1 bracket is easier to print, fails less often, and is stiffer too. It’s recommended to start with the simplified Update 3-1 optics assembly for the first build. See drawing for more information.

All development was done using filament. We've found it to be strong, easy to print, and have great surface finish.

A build plate of approximately 250x200mm is recommended for the largest parts

Parts may need to be rotated to align with print bed.

Several parts in the optics assembly use brass inserts for increased clamping load and the ability to swap out the components multiple times without wear. These inserts need to be heated above the plastic's melting point and pressed into the plastic. It's recommended to use an installation tip designed for brass inserts.

Demonstration of using a soldering iron to install brass inserts. The wire cutters are used to prevent the insert from pulling back out when not using an installation tip.

Parts that require inserts:

Installing brass inserts into the optics bracket is optional. They're intended as mounting points for future testing.

The headgear assembly includes parts that print flat but bend to fasten to each other. Although not necessary, it’s suggested to drape these parts around a form while the 3D print is still soft from the heated print bed. This minimizes strain inside the plastic and prolongs the life of the part.

Preheating the print bed to 70 °C softens a print enough to shape the part.

The following instructions can be used for version 1 of the calibration rig

This page describes both existing calibration methods, as well as how to align the Leap Motion Controller properly.

Believe it or not, most hardware doesn't work perfectly the exact moment it's assembled. Assembling headsets by hand leads to small variances in each headset that need to be compensated for in software. In order to get the best experience with your ProjectNorthstar headset, you'll need to follow a few steps in order to compensate for these variances.

sudo apt install build-essential cmake libgl1-mesa-dev libvulkan-dev libx11-xcb-dev libxcb-dri2-0-dev libxcb-glx0-dev libxcb-icccm4-dev libxcb-keysyms1-dev libxcb-randr0-dev libxrandr-dev libxxf86vm-dev mesa-common-dev

git clone https://github.com/KhronosGroup/OpenXR-SDK.git

cd OpenXR-SDK

cmake . -G Ninja -DCMAKE_INSTALL_PREFIX=/usr -Bbuild

ninja -C build install

sudo add-apt-repository ppa:monado-xr/monado

sudo apt-get update

sudo apt install libopenxr-loader1 libopenxr-dev libopenxr-utils build-essential git wget unzip cmake meson ninja-build libeigen3-dev curl patch python3 pkg-config libx11-dev libx11-xcb-dev libxxf86vm-dev libxrandr-dev libxcb-randr0-dev libvulkan-dev glslang-tools libglvnd-dev libgl1-mesa-dev ca-certificates libusb-1.0-0-dev libudev-dev libhidapi-dev libwayland-dev libuvc-dev libavcodec-dev libopencv-dev libv4l-dev libcjson-dev libsdl2-dev libegl1-mesa-dev

mkdir -p ~/.config/monado

cp doc/example_configs/config_v0.json.northstar_lonestar ~/.config/monado/config_v0.json

rs-ar-advanced #test that t26x is working

sudo leapd

NS_CONFIG_PATH=$(pwd)/src/xrt/drivers/north_star/exampleconfigs/v2_deckx_50cm.json monado-service

hello_xr -g Vulkan

Low-cost alternative to original board, but with 85 – 90Hz maximum stable refresh rate

Support for audio output

USB 2 (High-Speed) passthrough to downstream hub or direct peripheral connection

Backlight brightness control (can be switched off entirely) with non-volatile settings. When you plug in the headset, it has the same brightness as when you unplugged it. You can use a microcontroller to adjust the brightness, but you don’t need one connected for the display and backlight circuitry to work.

8-pin ribbon connector for future I2S stereo audio output breakout board

1x 12-pin ribbon connector for USB 2 with passthrough connection to display driver board’s backlight brightness control pins and one GPIO pin connection for switching power to one of the 6-pin ports (for resetting any finicky sensors with enumeration issues like the T261.)

Driver board dimensions:

USB hub speed: Max 20-30MB/s

Max useable USB2.0 high speed downstream ports: 4

4 Downstream ports, 3 with 6P Connector, 1 port with power switch controlled via the 4th port(12 Pin Connector)

USB Hub board dimension:

C:\Program Files (x86)\Steam\steamapps\common\SteamVR\drivers

vrpathreg adddriver <full_path_to>/resources/northstar

git clone https://github.com/fuag15/project_northstar_openvr_driver

cd project_northstar_openvr_driver

mkdir build

cd build

cmake -G "Visual Studio 16 2019" -A x64 ..

Affixing TWO of these Stereo Cameras to it: https://www.amazon.com/ELP-Industrial-Application-Synchronized-ELP-960P2CAM-V90-VC/dp/B078TDLHCP/

Acquiring a large secondary monitor to use as the calibration target

Find the exact model and active area of the screen for this monitor; we'll need it in 3)

Printing out an OpenCV calibration chessboard and affixing it to a flat backing board.

Flatness is absolutely crucial for the calibration.

Editing the config variables at the top of dualStereoChessboardCalibration.py with the correct:

Number of interior corners on each axis

Dimensions of each square on the checkerboard (in meters!)
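As a rough illustration of how these two settings are typically used in a chessboard calibration, the sketch below builds the 3D coordinates of the interior corners on a flat board. The variable and function names and the example values are assumptions and may not match those in dualStereoChessboardCalibration.py; it uses only the standard library so the unit handling is easy to inspect.

```python
from typing import List, Tuple

# Hypothetical config values; count and measure your own printed board.
INTERIOR_CORNERS = (9, 6)  # interior corners along each axis (columns, rows)
SQUARE_SIZE_M = 0.025      # edge length of one square in meters (25 mm)


def board_corner_positions(
    corners: Tuple[int, int], square_size_m: float
) -> List[Tuple[float, float, float]]:
    """Return (x, y, z) positions of the interior corners, row by row, with z = 0."""
    cols, rows = corners
    return [
        (col * square_size_m, row * square_size_m, 0.0)
        for row in range(rows)
        for col in range(cols)
    ]


points = board_corner_positions(INTERIOR_CORNERS, SQUARE_SIZE_M)
print(len(points), "corners; farthest corner at", points[-1])
```

Two details are easy to get wrong: a board with 10 x 7 squares has 9 x 6 interior corners, and entering the square size in millimeters instead of meters scales every recovered distance by a factor of 1000.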

Install Python 3 on your machine and run pip install numpy and pip install opencv-contrib-python

Run it from the python scripts folder, usually something like C:\Users\*USERNAME*\AppData\Local\Programs\Python\Python36\Scripts
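Before running the calibration script, it can save time to confirm that both packages are visible to the Python interpreter you intend to use. The check below is a small sketch with an assumed helper name; `cv2` is the module name that opencv-contrib-python installs.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found by this interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# numpy comes from `pip install numpy`; cv2 comes from `pip install opencv-contrib-python`.
missing = missing_modules(["numpy", "cv2"])
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All calibration dependencies found.")
```

If a module is reported missing even though pip claims it is installed, pip and python probably belong to different installations.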

Running dualStereoChessboardCalibration.py

First, ensure that your upper stereo camera appears on top in the camera visualizer

If not, exit the program, and unplug/replug your cameras' USB ports in various orders/ports until they do.

You should have a good cameraCalibration.json file in the main folder (from the last step)

Ensure that your main monitor is 1920x1080, that the calibration monitor appears to the left of the main monitor, and that the North Star display appears to the right of it.

This ensures that the automatic layout algorithm detects the various monitors correctly.
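The arrangement described above can be written down as a simple check on the left edge of each display in the virtual desktop. This is only a sketch of the rule as stated in this guide, not the calibrator's actual detection code; the `Monitor` type, the function name, and the example resolutions for the calibration monitor and headset are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Monitor:
    name: str
    x: int       # left edge in virtual-desktop pixels
    width: int   # horizontal resolution in pixels
    height: int  # vertical resolution in pixels


def layout_matches_guide(calibration: Monitor, main: Monitor, north_star: Monitor) -> bool:
    """Check the order calibration | main (1920x1080) | North Star, left to right."""
    main_is_1080p = (main.width, main.height) == (1920, 1080)
    calibration_on_left = calibration.x + calibration.width <= main.x
    north_star_on_right = north_star.x >= main.x + main.width
    return main_is_1080p and calibration_on_left and north_star_on_right


# Example values only; the calibration and headset resolutions are placeholders.
calibration = Monitor("calibration", x=-2560, width=2560, height=1440)
main = Monitor("main", x=0, width=1920, height=1080)
north_star = Monitor("north_star", x=1920, width=2880, height=1600)
print(layout_matches_guide(calibration, main, north_star))
```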

Edit config.json to have the active area for your calibration monitor you found earlier.
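If the manufacturer's spec sheet does not list the active area, it can be estimated from the panel diagonal and resolution, assuming square pixels. The helper below is a hypothetical sketch and not part of the calibrator; a published spec sheet is more reliable, and you should confirm which units config.json expects before entering the numbers.

```python
import math
from typing import Tuple


def active_area_m(diagonal_in: float, res_x: int, res_y: int) -> Tuple[float, float]:
    """Estimate active-area (width, height) in meters, assuming square pixels."""
    diagonal_m = diagonal_in * 0.0254  # inches to meters
    pixel_diagonal = math.hypot(res_x, res_y)
    width_m = diagonal_m * res_x / pixel_diagonal
    height_m = diagonal_m * res_y / pixel_diagonal
    return width_m, height_m


# Example only: a 24-inch 1920x1080 panel.
width_m, height_m = active_area_m(24.0, 1920, 1080)
print(f"{width_m:.3f} m x {height_m:.3f} m")
```

Note that the advertised diagonal is often rounded, so the estimate can be off by a few millimeters.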

Download this version of the Leap Service:

The calibrator was built with this version; it will complain if you don't have it :/

Now run NorthStarCalibrator.exe

You should see the top camera's images in the top right, and the bottom camera's images on the bottom.

If this is not so, please reconnect your cameras until it is (same process as for the checkerboard script)

First, point the bare calibration rig toward the calibration monitor

Ensure it is roughly centered on the monitor, so it can see all of the vertical area.

Then Press "1) Align Monitor Transform"

This will attempt to localize the monitor in space relative to the calibration rig. This is important for the later steps.

Next, place the headset onto the calibration rig and press "2) Create Reflector Mask"

This should mask out all of the camera's FoV except the region where the screen and reflectors overlap the calibration monitor.

If it does not appear to do this, double check that all of the prior steps have been followed correctly...

Now, before we press "3) Toggle Optimization", we'll want to adjust the bottom two sliders until they both show roughly equal brightness.

This is important since the optimizer is trying to create a configuration that yields a perfectly gray viewing area.

Now press "3) Toggle Optimization" and observe it.

It's switching between being valid for the upper and lower camera views, so only one image is going to appear to improve at a time.

You should see it gradually discovering where the aligned camera location is.

This is the finickiest step in the process. If it does not converge, it's possible that the headset is outside the standard build tolerances.

If it does converge on an aligned image, then congratulations! Toggle the optimization off again.

Press button 4) to hide the pattern, put the headset on, and use the arrow keys to adjust the view dependent/ergonomic distortion and the numpad 2,4,6,8 keys to adjust the rotation of the leap peripheral.

When satisfied, press 5) to Save the Calibration

This will save your calibration as a "Temp" calibration in the Calibrations Folder (a shortcut is available in the main folder).

You can differentiate between calibrations by the time in which they were created.
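If several "Temp" calibrations accumulate, sorting the folder by timestamp makes the most recent one easy to find. The sketch below uses the file modification time as a stand-in for creation time; the folder name and function name are assumptions for illustration.

```python
from pathlib import Path
from typing import List


def calibrations_newest_first(folder: Path) -> List[Path]:
    """Return the files in `folder`, most recently modified first."""
    files = [path for path in folder.iterdir() if path.is_file()]
    return sorted(files, key=lambda path: path.stat().st_mtime, reverse=True)


# Hypothetical location; point this at your own Calibrations folder.
calibrations_folder = Path("Calibrations")
if calibrations_folder.is_dir():
    for path in calibrations_newest_first(calibrations_folder):
        print(path.name)
```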

The first type of calibration you'll need to do is classified as Optical Calibration. This type of calibration uses stereo cameras to calculate the image distortion caused by the parabolic reflectors in the ProjectNorthstar headset. There are two versions of Optical Calibration you can do, depending on what hardware you have access to. Both versions currently require a 3D-printed Calibration Stand. The calibration methods pre-distort the image displayed on the screens so that it appears correct through the headset. Unity, Unreal, and other engines or compositors need to take the information generated by the calibration method and feed it into their rendering path in order to compute the image properly. Please see the table below for which methods are currently supported on specific platforms.